This is not a new post; it is based on Michael G. Noll's blog post about Running Hadoop on Ubuntu (Single Node).

I will go through the same steps, but I will point out some exceptions and errors you may face.

Because I am a very new Ubuntu user, this post mainly targets Windows users who have only basic knowledge of Linux. Some of my Linux hints may seem trivial to Linux geeks, but they may be helpful for Windows users.

Moreover, I assume that you have enough knowledge of the HDFS architecture. You can read this document for more details.

I have used Ubuntu 11.04 and Hadoop 0.20.2.

Prerequisites:

1. Installing Sun JDK 1.6: Installing a JDK is a required step before installing Hadoop. You can follow the steps in my previous post.

Update

There is another, simpler way to install the JDK (for example, JDK 1.7) using the instructions in this post.

2. Adding a dedicated Hadoop system user: You will need a dedicated user for the Hadoop system you will install. To create a new user "hduser" in a group called "hadoop", run the following commands in your terminal:

$sudo addgroup hadoop

$sudo adduser --ingroup hadoop hduser

3. Configuring SSH: In Michael's blog, he assumed that SSH is already installed. If you haven't installed an SSH server before, run the following command in your terminal:

$sudo apt-get install openssh-server

This command installs the SSH server on your machine; it listens on port 22 by default.

We install SSH because Hadoop needs SSH access to localhost (in a single-node cluster) or to the remote nodes (in a multi-node cluster).

After this step, you will need to generate an SSH key for hduser (and for any other users who will administer Hadoop). First switch to hduser, then generate a key with an empty passphrase (press Enter to accept the default key file location):

$su - hduser

$ssh-keygen -t rsa -P ""

Then authorize the new public key for logins to this machine. Without this step, SSH (and therefore ./start-all.sh) will keep asking for hduser's password:

$cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

To make sure that the SSH setup went well, try to open an SSH session as hduser with the following command (the first connection asks you to confirm the host key):

$ssh localhost

4. Disable IPv6: You will need to disable IP version 6 because Hadoop uses 0.0.0.0 in several of its networking-related configuration options, and on Ubuntu this can make Hadoop bind to the machine's IPv6 addresses. Run the following command with root privileges:

$sudo gedit /etc/sysctl.conf

This command opens sysctl.conf in a text editor. Copy the following lines to the end of the file:

#disable ipv6

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

Save the file and close it. If you get an error saying that you don't have permission, remember to run the previous command with root privileges (sudo).

These changes normally take effect after a reboot, but alternatively you can run the following command to reload the configuration:

$sudo sysctl -p

To make sure that IPv6 is disabled, run the following command:

$cat /proc/sys/net/ipv6/conf/all/disable_ipv6

The printed value should be 1, which means that IPv6 is disabled.
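If you want to re-check these prerequisites later (for example, right before starting Hadoop), here is a minimal bash sketch. The function names ipv6_disabled and passwordless_ssh are my own hypothetical helpers, not part of Ubuntu or Hadoop. The SSH check assumes you have already connected to localhost once and accepted its host key, because BatchMode=yes makes ssh fail instead of asking for a password or a confirmation.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for re-checking the prerequisites (not part of Hadoop).

# Succeeds if the kernel flag read above is 1. The path is a parameter only so
# the function can also be tried against an ordinary test file.
ipv6_disabled() {
  local flag_file="${1:-/proc/sys/net/ipv6/conf/all/disable_ipv6}"
  [ -r "$flag_file" ] && [ "$(cat "$flag_file")" = "1" ]
}

# Succeeds only if "ssh localhost" works without any prompt: BatchMode=yes
# makes ssh fail instead of asking for a password or passphrase.
passwordless_ssh() {
  local host="${1:-localhost}"
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1
}
```

Run both as hduser, for example ipv6_disabled && echo "IPv6 is disabled". If passwordless_ssh fails, the Hadoop start scripts will most likely ask for hduser's password once per daemon.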


Installing Hadoop

Now we can download Hadoop to begin the installation. Go to Apache Downloads and download Hadoop version 0.20.2. To avoid permission issues, download the tar file into hduser's home directory, for example /home/hduser. Check the following snapshot:

Then extract the tar file and rename the extracted folder to 'hadoop'. Open a new terminal and run the following commands:

$ cd /home/hduser

$ sudo tar xzf hadoop-0.20.2.tar.gz

$ sudo mv hadoop-0.20.2 hadoop

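Because the tar and mv commands above were run with sudo, the extracted folder belongs to root, and the Hadoop daemons will later fail with "Permission denied" when writing their logs. Give ownership of the folder to hduser (run this inside /home/hduser):

$ sudo chown -R hduser:hadoop hadoop

If you want another Hadoop admin user (e.g. hduser2) to own the installation instead, use that user in the same command: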

sudo chown -R hduser2:hadoop hadoop
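Files left owned by root are easy to miss. As a rough check, the following bash sketch lists everything under a directory that is not owned by a given user; list_not_owned_by is a hypothetical helper name, and it relies only on the ! -user test of find.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every file or directory under $1 whose owner is
# not the user $2. Empty output means the whole tree belongs to that user.
list_not_owned_by() {
  local dir="$1" user="$2"
  find "$dir" ! -user "$user" -print
}
```

For example, list_not_owned_by /home/hduser/hadoop hduser should print nothing once the folder belongs to hduser; any path it prints is a candidate for the "Permission denied" errors mentioned above.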

Update $HOME/.bashrc

You will need to update the .bashrc file of hduser (and of every user who will administer Hadoop). To open the .bashrc file, open it as root:

$sudo gedit /home/hduser/.bashrc

Then add the following configuration at the end of the .bashrc file:

# Set Hadoop-related environment variables

export HADOOP_HOME=/home/hduser/hadoop

# Set JAVA_HOME (we will also configure JAVA_HOME directly for Hadoop later on)

export JAVA_HOME=/usr/lib/jvm/java-6-sun

# or, if you installed JDK 1.7 using the instructions in the post mentioned above, use:

# export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_71

# Some convenient aliases and functions for running Hadoop-related commands

unalias fs &> /dev/null

alias fs="hadoop fs"

unalias hls &> /dev/null

alias hls="fs -ls"

# If you have LZO compression enabled in your Hadoop cluster and

# compress job outputs with LZOP (not covered in this tutorial):

# Conveniently inspect an LZOP compressed file from the command

# line; run via:

#

# $ lzohead /hdfs/path/to/lzop/compressed/file.lzo

#

# Requires installed 'lzop' command.

#

lzohead () {

hadoop fs -cat "$1" | lzop -dc | head -1000 | less

}

# Add Hadoop bin/ directory to PATH

export PATH=$PATH:$HADOOP_HOME/bin
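After saving .bashrc, open a new terminal (or run source ~/.bashrc) so the variables take effect. The following bash sketch checks that JAVA_HOME and HADOOP_HOME point at real installations. check_hadoop_env is a hypothetical helper, and it only tests for bin/java and bin/hadoop under those directories, which is an assumption about the usual layout rather than a complete validation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether JAVA_HOME and HADOOP_HOME look usable.
# Returns 0 if both look fine, 1 otherwise (details go to stderr).
check_hadoop_env() {
  local status=0
  # An empty JAVA_HOME is rejected explicitly, so "/bin/java" is never tested.
  if [ -z "${JAVA_HOME:-}" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME ('${JAVA_HOME:-}') does not contain an executable bin/java" >&2
    status=1
  fi
  if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "HADOOP_HOME ('${HADOOP_HOME:-}') does not contain an executable bin/hadoop" >&2
    status=1
  fi
  return "$status"
}
```

A failure here usually shows up later as "JAVA_HOME is not set" or as a NoClassDefFoundError when starting the daemons.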

Hadoop Configuration

Now we need to configure the Hadoop framework on the Ubuntu machine. The following are the configuration files we need to edit. To learn more about Hadoop configuration, you can visit this site.

hadoop-env.sh

We only need to update the JAVA_HOME variable in this file. Open it in a text editor using the following command:

$sudo gedit /home/hduser/hadoop/conf/hadoop-env.sh

Then change the following line:

# export JAVA_HOME=/usr/lib/j2sdk1.5-sun

To

export JAVA_HOME=/usr/lib/jvm/java-6-sun

Or, if you installed JDK 1.7 using the instructions in the post mentioned above, use the following line instead (remove the leading #):

# export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_71

Note: if you get the "Error: JAVA_HOME is not set" error while starting the services, you probably forgot to uncomment the JAVA_HOME line (just remove the leading #).
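If you prefer not to edit hadoop-env.sh by hand, the change can be scripted. This is a minimal sketch, assuming GNU sed (the default on Ubuntu) for in-place editing; set_hadoop_java_home is a hypothetical helper that turns every (possibly commented-out) export JAVA_HOME line into an active one, or appends the line if there is none.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set an active "export JAVA_HOME=..." in hadoop-env.sh.
# $1 = path to hadoop-env.sh, $2 = JDK directory (must not contain '|').
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  if grep -Eq '^#?[[:space:]]*export JAVA_HOME=' "$env_file"; then
    # Replace the commented or active line(s) in place (GNU sed -i).
    sed -i -E "s|^#?[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    # No JAVA_HOME line at all: append one.
    echo "export JAVA_HOME=${java_home}" >> "$env_file"
  fi
}
```

For example: set_hadoop_java_home /home/hduser/hadoop/conf/hadoop-env.sh /usr/lib/jvm/java-6-sun (run it as a user who can write the file).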

core-site.xml

First, we need to create a temp directory for the Hadoop framework. If you need this environment for testing or a quick prototype (e.g. developing simple Hadoop programs for your personal tests), I suggest creating this folder under the /home/hduser/ directory. Otherwise, you should create it in a shared place (like /usr/local ...), but you may face some permission issues there. To avoid the exceptions caused by permissions (like java.io.IOException), I have created the tmp folder under hduser's home directory.

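To create this folder and make hduser its owner, type the following commands (the folder is created with sudo, so without the chown it would belong to root, and formatting the NameNode would later fail with "Cannot create directory"):

$ sudo mkdir /home/hduser/tmp

$ sudo chown hduser:hadoop /home/hduser/tmp

Please note that if you want another admin user (e.g. hduser2 in the hadoop group) to run Hadoop instead, you should make that user the owner of this folder, with read, write, and execute permission for the owner, using the following commands: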

$ sudo chown hduser2:hadoop /home/hduser/tmp

$ sudo chmod 755 /home/hduser/tmp
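The mkdir, chown, and chmod commands above can also be combined into a single call to install -d, which creates the directory and sets its owner, group, and mode in one step. make_hadoop_dir below is only a hypothetical wrapper to show the idea.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: create directory $1 owned by user $2 and group $3,
# with mode 755 (owner rwx, group and others r-x), in a single step.
make_hadoop_dir() {
  local dir="$1" owner="$2" group="$3"
  install -d -o "$owner" -g "$group" -m 755 "$dir"
}
```

The equivalent one-off command would be: $ sudo install -d -o hduser -g hadoop -m 755 /home/hduser/tmp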

Now open hadoop/conf/core-site.xml in a text editor to edit the hadoop.tmp.dir entry:

$sudo gedit /home/hduser/hadoop/conf/core-site.xml

Then add the following properties between the <configuration> and </configuration> XML elements:

<!-- In: conf/core-site.xml -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hduser/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:54310</value>

<description>The name of the default file system. A URI whose

scheme and authority determine the FileSystem implementation. The

uri's scheme determines the config property (fs.SCHEME.impl) naming

the FileSystem implementation class. The uri's authority is used to

determine the host, port, etc. for a filesystem.</description>

</property>

mapred-site.xml

Open hadoop/conf/mapred-site.xml in a text editor and add the following property between the <configuration> elements (as in core-site.xml):

<!-- In: conf/mapred-site.xml -->

<property>

<name>mapred.job.tracker</name>

<value>localhost:54311</value>

<description>The host and port that the MapReduce job tracker runs

at. If "local", then jobs are run in-process as a single map

and reduce task.

</description>

</property>

hdfs-site.xml

Open hadoop/conf/hdfs-site.xml using a text editor and add the following configurations:

<!-- In: conf/hdfs-site.xml -->

<property>

<name>dfs.replication</name>

<value>1</value>

<description>Default block replication.

The actual number of replications can be specified when the file is created.

The default is used if replication is not specified in create time.

</description>

</property>
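One easy mistake is pasting the <property> elements outside the <configuration> element. As an alternative to editing the three files by hand, here is a rough bash sketch that writes a complete *-site.xml file from name/value pairs. write_site_xml is a hypothetical helper: it overwrites the whole file, drops the <description> elements, and does no XML escaping, so only use it with simple values like the ones above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write_site_xml FILE NAME VALUE [NAME VALUE ...]
# Writes FILE as a complete Hadoop configuration file (overwriting it).
write_site_xml() {
  local file="$1"; shift
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "write_site_xml: expected name/value pairs" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}
```

For example, the three files above could be generated with:

write_site_xml /home/hduser/hadoop/conf/core-site.xml hadoop.tmp.dir /home/hduser/tmp fs.default.name hdfs://localhost:54310

write_site_xml /home/hduser/hadoop/conf/mapred-site.xml mapred.job.tracker localhost:54311

write_site_xml /home/hduser/hadoop/conf/hdfs-site.xml dfs.replication 1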

Formatting NameNode

Before starting Hadoop for the first time, you need to format the HDFS NameNode. Do not do this while the system is running. It is usually done only once, when you first install Hadoop, because formatting erases all data stored in HDFS.

Run the following command:

$/home/hduser/hadoop/bin/hadoop namenode -format
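Because formatting should never be done while the system is running, you could wrap the command in a small guard. This is a minimal sketch: format_namenode_safely is a hypothetical helper, it assumes pgrep (from the procps package) is available, and it looks for the NameNode's Java class name on the process command line.

```bash
#!/usr/bin/env bash
# Hypothetical guard: format HDFS only if no NameNode process is running.
# $1 = path to the hadoop script (defaults to the location used in this post).
format_namenode_safely() {
  local hadoop_bin="${1:-/home/hduser/hadoop/bin/hadoop}"
  # The [N] keeps the pattern from matching a shell whose own command line
  # happens to contain this text.
  if pgrep -f 'org\.apache\.hadoop\.hdfs\.server\.namenode\.[N]ameNode' >/dev/null 2>&1; then
    echo "A NameNode is running; run ./stop-all.sh before formatting." >&2
    return 1
  fi
  "$hadoop_bin" namenode -format
}
```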

|

| NameNode Formatting |

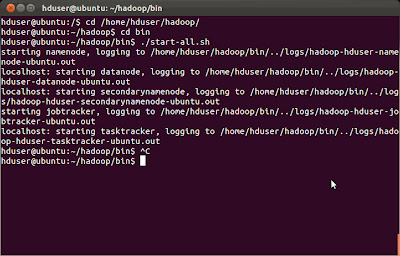

Starting Hadoop Cluster

Navigate to the hadoop/bin directory and run the ./start-all.sh script:

$ cd /home/hduser/hadoop/bin

$ ./start-all.sh

Screenshot: Starting Hadoop Services using ./start-all.sh

There is a nice tool called jps, which ships with the JDK and lists the running Java processes. You can use it to make sure that all the services are up.

Screenshot: Using jps tool
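jps prints one line per Java process, in the form "<pid> <class name>". On a healthy single-node Hadoop 0.20.2 setup you should see NameNode, DataNode, SecondaryNameNode, JobTracker, and TaskTracker (plus Jps itself). The following bash sketch, using the hypothetical helper name missing_daemons, reads jps output on stdin and prints the daemons that are not running.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print, one per line,
# each expected Hadoop 0.20 daemon that does not appear in it.
missing_daemons() {
  local running d
  running=$(awk '{print $2}')   # keep only the class-name column
  for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
    # -x requires a whole-line match, so "NameNode" does not match
    # "SecondaryNameNode".
    printf '%s\n' "$running" | grep -qx "$d" || echo "$d"
  done
}
```

Run it as jps | missing_daemons. Empty output means all five daemons are up; otherwise, check the log file of each missing daemon under /home/hduser/hadoop/logs.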

Running an Example (Pi Example)

There are many built-in examples. We can run the Pi estimator example using the following command (the two arguments are the number of map tasks and the number of samples per map):

hduser@ubuntu:~/hadoop/bin$ hadoop jar ../hadoop-0.20.2-examples.jar pi 3 10
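The relative path ../hadoop-0.20.2-examples.jar only works when you run the command from hadoop/bin. Here is a minimal bash sketch that uses absolute paths instead. run_pi_example is a hypothetical helper, and it assumes HADOOP_HOME is set as in .bashrc above (falling back to /home/hduser/hadoop) and that the examples jar has the 0.20.2 name used in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run the Pi example from any directory.
# $1 = number of map tasks (default 3), $2 = samples per map (default 10).
run_pi_example() {
  local hadoop_home="${HADOOP_HOME:-/home/hduser/hadoop}"
  local jar="$hadoop_home/hadoop-0.20.2-examples.jar"
  if [ ! -f "$jar" ]; then
    # Fail early with a clear message instead of a ZipException.
    echo "Examples jar not found: $jar" >&2
    return 1
  fi
  "$hadoop_home/bin/hadoop" jar "$jar" pi "${1:-3}" "${2:-10}"
}
```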

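If you get an "Incompatible namespaceIDs" exception (this typically happens after reformatting the NameNode of an existing installation), you can do the following:

1. Stop all the services (by calling ./stop-all.sh).

2. Delete the contents of the DataNode data directory. With hadoop.tmp.dir set to /home/hduser/tmp as above, this is (note that this removes the blocks stored in HDFS):

/home/hduser/tmp/dfs/data/*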

3. Start all the services.
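Before following the steps above, you can confirm that the NameNode and the DataNode really disagree. Each storage directory records its ID in a current/VERSION file as a namespaceID=<number> line. The bash sketch below compares the two; namespace_id and namespace_ids_match are hypothetical helpers, and the default paths assume the hadoop.tmp.dir of /home/hduser/tmp configured above together with the default dfs.name.dir and dfs.data.dir locations.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the namespaceID stored in <dir>/current/VERSION.
namespace_id() {
  sed -n 's/^namespaceID=//p' "$1/current/VERSION"
}

# Hypothetical helper: succeed only if NameNode and DataNode IDs are equal.
namespace_ids_match() {
  local name_dir="${1:-/home/hduser/tmp/dfs/name}"
  local data_dir="${2:-/home/hduser/tmp/dfs/data}"
  local nn dn
  nn=$(namespace_id "$name_dir") || return 1
  dn=$(namespace_id "$data_dir") || return 1
  echo "namenode=$nn datanode=$dn"
  [ -n "$nn" ] && [ "$nn" = "$dn" ]
}
```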


![Disable IP V6](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhYCVPBzrt6hFY5WylwL0Fh_7dVug2_k_Y8GFPfB6Zb0gDDlpdLyl3TP8BQfpXasD727j359OYuC5z_sMYZsfldNElQlsBasWx14s6WA-qjhKXlFLWCKxlX4K5jbzi8xTGPJpmRl5n4fz9A/s400/Disable+IP+V6.jpg)

![Download Hadoop](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgL_USKV1mtsexX_R6rITh8J25A9ykH3cxpMsW7VbNSkma8-wrrT5oaSM55feu0hx5Wu7vUYs-1SoRmJL3I0jHfjli7rXAaDHRUoaS_c7INz2HxGxVUpfydE9fEQtSRMW4h56yuOkrMjUQS/s400/Download+Hadoop.jpg)

Java environment: Hadoop 0.20.2 also works well with OpenJDK.

Thanks Lukee for your fruitful comment :) I will try setting it up with OpenJDK in the future.

Really nice explanation... Thanks Yahia

When I ran the ./start-all.sh script, I got the following error:


hduser@ubuntu:~/hadoop/bin$ ./start-all.sh script

starting namenode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-namenode-ubuntu.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 117: /home/hduser/hadoop/bin/../logs/hadoop-hduser-namenode-ubuntu.out: Permission denied

head: cannot open `/home/hduser/hadoop/bin/../logs/hadoop-hduser-namenode-ubuntu.out' for reading: No such file or directory

hduser@localhost's password:

hduser@localhost's password: localhost: Permission denied, please try again.

hduser@localhost's password: localhost: Permission denied, please try again.

Please help me out.

Hi dheeraj,

Run it with root privileges.

Hi dheeraj,

It seems that you have set up the Hadoop working directory outside hduser's permissions. Try giving hduser ownership (and therefore read/write access) of the working directory by running the following command:

sudo chown -R hduser:hadoop /home/hduser/hadoop

Thanks tirumurugan for your comment :)

Hi,

I installed the stable version of Hadoop successfully, but I am confused about installing Hadoop 2.0.0.

I want to install hadoop-2.0.0-alpha on two nodes, using federation on both machines. "rsi-1" and "rsi-2" are the hostnames.

What should the values of the following properties be to implement federation? Both machines are also used as datanodes.

fs.defaultFS

dfs.federation.nameservices

dfs.namenode.name.dir

dfs.datanode.data.dir

yarn.nodemanager.localizer.address

yarn.resourcemanager.resource-tracker.address

yarn.resourcemanager.scheduler.address

yarn.resourcemanager.address

One more point: in the stable version of Hadoop, I have the configuration files under the conf folder in the installation directory.

But in the 2.0.0-alpha version, there is an etc/hadoop directory, and it doesn't have mapred-site.xml or hadoop-env.sh. Do I need to copy the conf folder under the share folder into the Hadoop home directory? Or do I need to copy these files from the share folder into the etc/hadoop directory?

Regards,

Rashmi

Hi Rashmi

I haven't tried installing Hadoop 2.0.0-alpha yet; I may try to install it and publish a new post.


Hi,

I am very new to Hadoop and Ubuntu. Could you please suggest a document to get me started?

I am working as a Java developer. My role is to import files into and export files from HDFS in Java, and at the same time run .sh files that run R scripts to perform MapReduce...

Any help would be greatly appreciated.

Thanks in advance.

Thanks, for a single node it is working fine.

Please write a tutorial on a multi-node Hadoop setup.

Hi Anju, I will try to post about multi-node hadoop setup soon, and thanks a lot for your comment :)

To solve the Incompatible namespaceIDs error, here is an alternative approach:

1) First stop the Hadoop services with ./stop-all.sh on the Hadoop master.

2) Go to the following directory on all slave nodes: /data-store/dfs/data/current/, where /data-store/dfs/data/ is the data directory you have set in /conf/hdfs-site.xml for the dfs.data.dir property.

3) Edit the VERSION file: vi VERSION

4) From the Incompatible namespaceIDs error in the DataNode log (it shows both the namenode and the datanode namespaceID), copy the namenode namespaceID and paste it as the namespaceID value in the VERSION file of the slave.

5) Do this on all the slave nodes.

6) Start Hadoop with ./start-all.sh on the Hadoop master.

7) Then go to the slaves and check datanode service.
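Steps 3 and 4 above can be scripted as well. This is a rough bash sketch, not a tested tool: set_datanode_namespace_id is a hypothetical helper that keeps a backup of the VERSION file and replaces its namespaceID line with the NameNode's ID. The data directory is whatever dfs.data.dir points to on that slave, and Hadoop must be stopped while you run it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set_datanode_namespace_id DATA_DIR NEW_ID
# Rewrites the namespaceID line of DATA_DIR/current/VERSION (GNU sed -i),
# keeping a copy of the old file as VERSION.bak.
set_datanode_namespace_id() {
  local data_dir="$1" new_id="$2"
  local version_file="$data_dir/current/VERSION"
  case "$new_id" in
    ''|*[!0-9]*) echo "namespaceID must be numeric: '$new_id'" >&2; return 1 ;;
  esac
  cp "$version_file" "$version_file.bak" || return 1
  sed -i "s/^namespaceID=.*/namespaceID=${new_id}/" "$version_file"
}
```

For example, with the data directory from the comment above: set_datanode_namespace_id /data-store/dfs/data <namenode namespaceID>.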

What if, instead of setting up Hadoop like this, we use the Cloudera distribution?

I experienced a different error and I have no clue how to solve it:

hduser@ubuntu:~/hadoop/bin$ ./start-all.sh

starting namenode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-namenode-ubuntu.out

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/hdfs/server/namenode/NameNode

...

localhost: at java.security.AccessController.doPrivileged(Native Method)

localhost: at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

localhost: at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

localhost: at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

localhost: at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

localhost: Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

localhost: Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/mapred/TaskTracker

Hi Farhat Khan,

It seems that your Hadoop jar is not on the correct classpath. Make sure that you have updated the $HOME/.bashrc file with the correct paths, and let me know.

Hi Yahia,

Your suggestion helped, and I have made the corrections in .bashrc. :-)

In the next step, running a sample example, I came across a different error. I would really appreciate it if you could help:

hduser@ubuntu:~/hadoop/bin$ hadoop jar hadoop-0.20.2-examples.jar pi

Exception in thread "main" java.io.IOException: Error opening job jar: hadoop-0.20.2-examples.jar

at org.apache.hadoop.util.RunJar.main(RunJar.java:90)

Caused by: java.util.zip.ZipException: error in opening zip file

at java.util.zip.ZipFile.open(Native Method)

at java.util.zip.ZipFile.<init>(ZipFile.java:127)

at java.util.jar.JarFile.<init>(JarFile.java:135)

at java.util.jar.JarFile.<init>(JarFile.java:72)

at org.apache.hadoop.util.RunJar.main(RunJar.java:88)

Hi Farhat

Good news :)

About the jar: maybe the jar file is corrupted. Can you please download it again and tell me the results?

I also found a suggestion in a forum that the permissions on the hadoop directory can also be the reason for this. Do you have any idea what it is exactly?

I also looked at the permissions on the hadoop directory and found this:

drwxrwxrwx 13 farhatkhan hadoop 4096 Oct 17 12:19 hadoop

Any help?

I have the same problem as above. Any fixes?

Hi Haseeb,

Yes, we can use the Cloudera distribution to install Hadoop. But many Hadoop developers recommend installing it manually to learn all the configurations and options behind the scenes. If you already know the advanced options and configurations, you can install Hadoop directly using the Cloudera distribution.

And thanks for your comment :)

Hello Yahia

Thanks for your kind support.

I have a problem: when I run the ./start-all.sh command, it asks for a password for my private key. Please tell me what the password should be.

Thanks again..

Hi Xeat,

You're welcome anytime :)

I think you should generate your SSH key for hduser with an empty passphrase using the following commands:

$su - hduser

$ssh-keygen -t rsa -P ""

You can replace hduser with any other user who will administer the Hadoop environment.

Thanks Yahia.. Good information!!

Unfortunately, I set a password for hduser, so whenever I start Hadoop I am asked for the password for each service, as shown below, although Hadoop starts successfully. I want to remove the password prompt for hduser. Can you help me here?

hduser@tcs-VirtualBox:~/hadoop-0.20.2/bin$ ./start-all.sh

starting namenode, logging to /home/hduser/hadoop-0.20.2/bin/../logs/hadoop-hduser-namenode-tcs-VirtualBox.out

hduser@localhost's password:

localhost: starting datanode, logging to /home/hduser/hadoop-0.20.2/bin/../logs/hadoop-hduser-datanode-tcs-VirtualBox.out

hduser@localhost's password:

localhost: starting secondarynamenode, logging to /home/hduser/hadoop-0.20.2/bin/../logs/hadoop-hduser-secondarynamenode-tcs-VirtualBox.out

starting jobtracker, logging to /home/hduser/hadoop-0.20.2/bin/../logs/hadoop-hduser-jobtracker-tcs-VirtualBox.out

hduser@localhost's password:

Hi Neha,

You're welcome :)

Did you try the chmod command on the hadoop folder?

I tried following the steps given in the blog http://mysolvedproblem.blogspot.in/2012/05/installing-hadoop-on-ubuntu-linux-on.html, but I could not get it done. I downloaded Hadoop 0.22.0 instead of 0.20.2, but I modified the commands accordingly.

About generating the key for hduser (the dedicated Hadoop user):

What is meant by "key"?

$sudo gedit /home/hduser/.bashrc

This command is saying that there is no such file.

Could anyone help me with this?

Hi Manjush

Generating an SSH key is an important step for connecting to the Hadoop server using SSH.

The .bashrc file should exist for every user you create. It is a hidden file, but the command should still open it.

Thanks for the tutorial. Everything went fine, but I skipped the ssh-keygen part because I had problems with it that I couldn't solve. It worked without it, using password authentication, when I tried the wordcount example, but I failed to view localhost:54310, localhost:54311, etc. to see the NameNode, JobTracker, etc. Is this related to the SSH RSA key?

To see the NameNode, use localhost:50070.

Thanks. 54310 and 54311 were the ones used by Cloudera.

Thanks eng. Yahia, but I have a permission problem at the step of formatting the NameNode:

ReplyDeletebash: bin/hadoop: Permission denied

I have tried Ubuntu 12.04 and 12.10 and the same error exists!! Any idea?

Ahmed, did you try to generate the SSH key? And thanks for your visit :)

Thanks Dr. Yahia.

I am a new TA @ FCI-CU and just started learning about Hadoop.

I solved it :)

It was missing execute permission on the files under the bin directory (e.g. bin/hadoop).

Hi Ahmed,

Great :) Hope to see you soon.

When I run ./startup.sh I get the following error, please help me...

This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

chown: changing ownership of `/home/mtech11/hadoop/bin/../logs': Operation not permitted

starting namenode, logging to /home/mtech11/hadoop/bin/../logs/hadoop-mtech11-namenode-cse-desktop.out

/home/mtech11/hadoop/bin/../bin/hadoop-daemon.sh: line 136: /home/mtech11/hadoop/bin/../logs/hadoop-mtech11-namenode-cse-desktop.out: Permission denied

head: cannot open `/home/mtech11/hadoop/bin/../logs/hadoop-mtech11-namenode-cse-desktop.out' for reading: No such file or directory

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: org.apache.hadoop.util.PlatformName. Program will exit.

Prathibha.P.G

I have another question: my JobTracker and TaskTracker are not running.

Kindly help as soon as possible.

Hi

Are you sure the Hadoop versions are correct?

Hi, I installed and configured Hadoop as described above. I am getting the following error when running the NameNode format command:

sandeep@sandeep-Inspiron-N5010:~/Downloads$ hadoop/bin/hadoop namenode -format

Warning: $HADOOP_HOME is deprecated.

13/01/14 10:09:48 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = sandeep-Inspiron-N5010/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.0.4

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0 -r 1393290; compiled by 'hortonfo' on Wed Oct 3 05:13:58 UTC 2012

************************************************************/

13/01/14 10:09:48 INFO util.GSet: VM type = 32-bit

13/01/14 10:09:48 INFO util.GSet: 2% max memory = 17.77875 MB

13/01/14 10:09:48 INFO util.GSet: capacity = 2^22 = 4194304 entries

13/01/14 10:09:48 INFO util.GSet: recommended=4194304, actual=4194304

13/01/14 10:09:49 INFO namenode.FSNamesystem: fsOwner=sandeep

13/01/14 10:09:49 INFO namenode.FSNamesystem: supergroup=supergroup

13/01/14 10:09:49 INFO namenode.FSNamesystem: isPermissionEnabled=true

13/01/14 10:09:49 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

13/01/14 10:09:49 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

13/01/14 10:09:49 INFO namenode.NameNode: Caching file names occuring more than 10 times

13/01/14 10:09:49 ERROR namenode.NameNode: java.io.IOException: Cannot create directory /home/hduser/tmp/dfs/name/current

at org.apache.hadoop.hdfs.server.common.Storage$StorageDirectory.clearDirectory(Storage.java:297)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:1320)

at org.apache.hadoop.hdfs.server.namenode.FSImage.format(FSImage.java:1339)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:1164)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1271)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1288)

13/01/14 10:09:49 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at sandeep-Inspiron-N5010/127.0.1.1

************************************************************/

My Hadoop version is hadoop-1.0.4; I selected it according to Michael Noll's blog.

Please let me know whether the above issue is related to the version, and whether I should select hadoop-0.22.0 instead.

Try this site with your Hadoop version....

http://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-single-node-cluster/

Hi Sandeep Dange

Are you sure that you have given permissions to hduser (on your Hadoop installation directory)?

Hi Yahia Zakaria,

When I tried to format the NameNode, I got the error below.

hduser@ubuntu:~/usr/local/hadoop$ bin/hadoop namenode -format

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/hdfs/server/namenode/NameNode

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hdfs.server.namenode.NameNode

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:307)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:248)

Could not find the main class: org.apache.hadoop.hdfs.server.namenode.NameNode. Program will exit.

When I checked hadoop-core.jar, it had the NameNode.class file. What should I do now? Any suggestions? Thanks in advance!

Hi Rajasri,

You can try getting more information by checking the logs.

Check for errors in /var/log/hadoop-0.20 for each daemon.

Check that all ports are open using $netstat -ptlen

Thanks

If I forget the superuser password in Hadoop, how can I retrieve it? I am using Ubuntu-12.04-desktop-i386.

Hi, we created a user name and password and generated the key. After that, we tried to disable IPv6 by typing this command:

$sudo gedit /etc/sysctl.conf

but we get an error saying that the user is not in the sudoers file and that this incident will be reported.

Please help us..........

I have the same problem, somebody help here. I enabled the root user with the command "sudo passwd root", and even so I get an error that says: "Cannot open display: Run 'gedit --help' to see a full list of available command line options."

Hi yahia,

I am creating a single-node Hadoop setup on a Fedora machine. I followed your blog, formatted the NameNode, and got output like this:

namenode report:

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/b

ranch-1.0 -r 1393290;

compiled by 'hortonfo' on Wed Oct 3 05:13:58 UTC 2012

************************************************************/

13/02/01 14:48:38 INFO util.GSet: VM type = 64-bit

13/02/01 14:48:38 INFO util.GSet: 2% max memory = 17.77875 MB

13/02/01 14:48:38 INFO util.GSet: capacity = 2^21 = 2097152 entries

13/02/01 14:48:38 INFO util.GSet: recommended=2097152, actual=2097152

13/02/01 14:48:39 INFO namenode.FSNamesystem: fsOwner=hduser

13/02/01 14:48:39 INFO namenode.FSNamesystem: supergroup=supergroup

13/02/01 14:48:39 INFO namenode.FSNamesystem: isPermissionEnabled=true

13/02/01 14:48:39 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

13/02/01 14:48:39 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

13/02/01 14:48:39 INFO namenode.NameNode: Caching file names occuring more than 10 times

13/02/01 14:48:39 INFO common.Storage: Image file of size 112 saved in 0 seconds.

13/02/01 14:48:39 INFO common.Storage: Storage directory /home/hduser/tmp/dfs/name has been successfully formatted.

13/02/01 14:48:39 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

************************************************************/

[hduser@localhost ~]$

But when I run start-all.sh, I get this error:

./start-all.sh

mkdir: cannot create directory ‘/home/hduser/hadoop/libexec/../logs’: Permission denied

chown: cannot access ‘/home/hduser/hadoop/libexec/../logs’: No such file or directory

starting namenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-localhost.localdomain.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 135: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-localhost.localdomain.out: No such file or directory

head: cannot open ‘/home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-localhost.localdomain.out’ for reading: No such file or directory

hduser@localhost's password:

localhost: mkdir: cannot create directory ‘/home/hduser/hadoop/libexec/../logs’: Permission denied

Kindly help with this. Thanks in advance.

Are you sure that you are logged in as hduser? You have two options:

1. Run all Hadoop commands as a sudo user, or

2. Give your user permissions on the hadoop folder (using the chmod command).
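
Before choosing an option, it can help to confirm that this really is a permissions problem. A minimal sketch, where check_hadoop_writable is a hypothetical helper and /home/hduser/hadoop is assumed from the error messages: it tries to create the logs folder that hadoop-daemon.sh writes to and, if that fails, prints a chown command. Changing the owner with chown is another way to give your user the permissions described in option 2.

```shell
#!/usr/bin/env bash
# check_hadoop_writable (hypothetical helper): make sure the current user
# can create the logs directory that hadoop-daemon.sh writes to.
check_hadoop_writable() {
  local hadoop_home="${1:-$HOME/hadoop}"      # assumed install path, as in the errors above
  local logs="$hadoop_home/logs"
  if mkdir -p "$logs" 2>/dev/null && [ -w "$logs" ]; then
    echo "OK: $logs is writable by $(id -un)"
    return 0
  fi
  echo "cannot write to $logs as $(id -un); try, from a sudoer account:" >&2
  echo "  sudo chown -R $(id -un) $hadoop_home" >&2
  return 1
}
```

Run it as hduser (check_hadoop_writable /home/hduser/hadoop) before ./start-all.sh; if it reports OK, the mkdir/chown errors should not come back.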

Hi,

My DataNode is not running when I run the jps command. Kindly help me. I am using Hadoop 0.22.0 and Ubuntu 10.04.

Hi,

You can try to start the DFS service alone (start-dfs.sh), and please send me the logs you have.

Hi all,

I am setting up Hadoop on Fedora Linux. I managed to get all my NameNode, DataNode and JobTracker services running.

[hduser@localhost bin]$ ./start-all.sh

starting namenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-localhost.localdomain.out

hduser@localhost's password:

localhost: starting datanode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-datanode-localhost.localdomain.out

hduser@localhost's password:

localhost: starting secondarynamenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-localhost.localdomain.out

starting jobtracker, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-localhost.localdomain.out

hduser@localhost's password:

localhost: starting tasktracker, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-tasktracker-localhost.localdomain.out

[hduser@localhost bin]$

When I try to run a sample job, I get this error:

bash: hadoop: command not found...

Kindly help. Thanks

Hi Arun

Can you please send the command you are using to run the sample job?

Thanks

Hi Yahia,

Thanks for the reply.

I managed to run the sample job in my single-node setup, and it was working fine.

Can you help me set up a multi-node cluster? This is the command I used, and it works fine: "[hduser@localhost bin]$ ./hadoop jar /home/hduser/hadoop/hadoop-examples-1.0.4.jar grep input output 'dfs[a-z.]+'"

I also want to check with you: to run this sample job, I created the input directory using the command "hadoop fs -mkdir input". Where is this input directory created?

I tried creating the multi-node setup. Using the VHD disk I configured for the single-node setup, I created two more VMs, edited the masters and slaves files located in hadoop/conf, and ran the wordcount sample job from the master node. The job ran successfully, but I am not sure whether the slave nodes were utilized. How can I make sure that all the nodes are utilized? Kindly help; awaiting your reply.

Thanks,

Arun
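
One way to check whether the slaves joined the cluster is to ask the NameNode how many DataNodes it sees, by running bin/hadoop dfsadmin -report on the master. The sketch below extracts that number. It assumes the Hadoop 1.x report format, whose summary line reads "Datanodes available: N (T total, D dead)"; live_datanodes is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# live_datanodes (hypothetical helper): read `hadoop dfsadmin -report` output
# on stdin and print the number of live DataNodes.
live_datanodes() {
  # Assumes the Hadoop 1.x summary line "Datanodes available: N (T total, D dead)"
  sed -n 's/^Datanodes available: \([0-9][0-9]*\).*/\1/p' | head -n 1
}
```

For example, bin/hadoop dfsadmin -report | live_datanodes should print the number of machines listed in conf/slaves; a smaller number means some DataNodes never registered with the master.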

I am having the same problem on a single node. How did you fix it?

Hi all,

I have created a Hadoop cluster with one master and two slave nodes. The DataNode log on slave1 shows:

STARTUP_MSG: Starting DataNode

STARTUP_MSG: host = slave1/10.11.240.149

STARTUP_MSG: args = []

STARTUP_MSG: version = 1.0.4

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0 -r 1393290; compiled by 'hortonfo' on Wed Oct 3 05:13:58 UTC 2012

************************************************************/

2013-02-06 13:15:57,923 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2013-02-06 13:15:57,942 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source MetricsSystem,sub=Stats registered.

2013-02-06 13:15:57,945 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2013-02-06 13:15:57,945 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: DataNode metrics system started

2013-02-06 13:15:58,077 INFO org.apache.hadoop.metrics2.impl.MetricsSourceAdapter: MBean for source ugi registered.

2013-02-06 13:15:59,347 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 0 time(s).

2013-02-06 13:16:00,351 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 1 time(s).

2013-02-06 13:16:01,356 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 2 time(s).

2013-02-06 13:16:02,360 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 3 time(s).

2013-02-06 13:16:03,365 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 4 time(s).

2013-02-06 13:16:04,367 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 5 time(s).

2013-02-06 13:16:05,371 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 6 time(s).

2013-02-06 13:16:06,373 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 7 time(s).

2013-02-06 13:16:07,377 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 8 time(s).

2013-02-06 13:16:08,379 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: master/10.11.240.148:54310. Already tried 9 time(s).

2013-02-06 13:16:15,485 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: java.io.IOException: Call to master/10.11.240.148:54310 failed on local exception: java.net.NoRouteToHostException: No route to host

at org.apache.hadoop.ipc.Client.wrapException(Client.java:1107)

at org.apache.hadoop.ipc.Client.call(Client.java:1075)

at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:225)

at $Proxy5.getProtocolVersion(Unknown Source)

at org.apache.hadoop.ipc.RPC.getProxy(RPC.java:396)

at org.apache.hadoop.ipc.RPC.getProxy(RPC.java:370)

at org.apache.hadoop.ipc.RPC.getProxy(RPC.java:429)

Please help.

Thanks,

Arun

Try to ping the slaves from the master node and tell me the result.

Hi Yahia, I am able to ping the master from the slave.

Hi Arun,

Can you check that the Hadoop services are up on the master and slaves? You can also telnet to each machine to check the open ports: ports 54310 and 54311 should be open. You can also use the nmap tool to check the open ports.
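
If telnet or nmap is not installed, bash itself can test whether a port accepts connections. This is a minimal sketch using bash's /dev/tcp redirection and the coreutils timeout command; check_port is a hypothetical helper, and the host name master and ports 54310/54311 come from the log and reply above. A NoRouteToHostException while ping works often points to a firewall on the master (for example iptables on Fedora) rather than to Hadoop itself.

```shell
#!/usr/bin/env bash
# check_port (hypothetical helper): report whether HOST accepts TCP
# connections on PORT, using bash's /dev/tcp pseudo-device.
check_port() {
  local host="$1" port="$2"
  # Open (and immediately discard) a connection; give up after 3 seconds
  if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "${host}:${port} open"
  else
    echo "${host}:${port} closed or unreachable"
    return 1
  fi
}
```

Run it on a slave, for example: for p in 54310 54311; do check_port master "$p"; done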


Hi Yahia,

Thanks for the reply, I will check it.

I also created a Hadoop cluster with 1 master and 2 slave nodes using Cloudera Manager, with the help of this link: http://www.youtube.com/watch?v=CobVqNMiqww. In this case, when I try to run a sample job, it throws the error below. I can't find the reason; can you please help me with this?

[root@n1 bin]# ./hadoop jar /usr/lib/hadoop/hadoop-examples-1.0.4.jar grep input output 'dfs[a-z.]+'

java.lang.NoSuchMethodError: org.apache.hadoop.util.ProgramDriver.driver([Ljava/lang/String;)V

at org.apache.hadoop.examples.ExampleDriver.main(ExampleDriver.java:64)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.util.RunJar.main(RunJar.java:208)

Thanks,

Arun

When I submit the input file for wordcount (I tried both input.txt and input), it gives this message:

hduser@slave:/usr/local/hadoop$ bin/hadoop dfs -copyFromLocal /tmp/input/ /user/input/

copyFromLocal: Target /user/input/input is a directory

Try to copy the file itself, not the whole directory. I mean: hadoop dfs -copyFromLocal /tmp/input/input.txt /user/input/


Thanks Yahia Zakaria, it works, but I have a new problem, shown below. I get the same problem with the word count program; I think I missed a step while installing Hadoop.

When I tried this one:

hduser@slave:/usr/local/hadoop$ bin/hadoop jar hadoop-examples-1.0.4.jar pi 3 10

Number of Maps = 3

Samples per Map = 10

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Starting Job

13/02/08 02:56:50 INFO mapred.FileInputFormat: Total input paths to process : 3

13/02/08 02:56:51 INFO mapred.JobClient: Running job: job_201302080254_0002

13/02/08 02:56:52 INFO mapred.JobClient: map 0% reduce 0%

13/02/08 02:56:55 INFO mapred.JobClient: Task Id : attempt_201302080254_0002_m_000004_0, Status : FAILED

Error initializing attempt_201302080254_0002_m_000004_0:

java.io.IOException: Exception reading file:/dfs/name/current/mapred/local/ttprivate/taskTracker/hduser/jobcache/job_201302080254_0002/jobToken

at org.apache.hadoop.security.Credentials.readTokenStorageFile(Credentials.java:135)

at org.apache.hadoop.mapreduce.security.TokenCache.loadTokens(TokenCache.java:165)

at org.apache.hadoop.mapred.TaskTracker.initializeJob(TaskTracker.java:1181)

at org.apache.hadoop.mapred.TaskTracker.localizeJob(TaskTracker.java:1118)

at org.apache.hadoop.mapred.TaskTracker$5.run(TaskTracker.java:2430)

at java.lang.Thread.run(Thread.java:722)

Caused by: java.io.FileNotFoundException: File file:/dfs/name/current/mapred/local/ttprivate/taskTracker/hduser/jobcache/job_201302080254_0002/jobToken does not exist.

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:397)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:251)

at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.(ChecksumFileSystem.java:125)

at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:283)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:427)

at org.apache.hadoop.security.Credentials.readTokenStorageFile(Credentials.java:129)

... 5 more

13/02/08 02:56:55 WARN mapred.JobClient: Error reading task outputhttp://localhost:50060/tasklog?plaintext=true&attemptid=attempt_201302080254_0002_m_000004_0&filter=stdout

13/02/08 02:56:55 WARN mapred.JobClient: Error reading task outputhttp://localhost:50060/tasklog?plaintext=true&attemptid=attempt_201302080254_0002_m_000004_0&filter=stderr

13/02/08 02:56:58 INFO mapred.JobClient: Task Id : attempt_201302080254_0002_m_000004_1, Status : FAILED

Error initializing attempt_201302080254_0002_m_000004_1:

java.io.IOException: Exception reading file:/dfs/name/current/mapred/local/ttprivate/taskTracker/hduser/jobcache/job_201302080254_0002/jobToken

at org.apache.hadoop.security.Credentials.readTokenStorageFile(Credentials.java:135)

at org.apache.hadoop.mapreduce.security.TokenCache.loadTokens(TokenCache.java:165)

at org.apache.hadoop.mapred.TaskTracker.initializeJob(TaskTracker.java:1181)

at org.apache.hadoop.mapred.TaskTracker.localizeJob(TaskTracker.java:1118)

at org.apache.hadoop.mapred.TaskTracker$5.run(TaskTracker.java:2430)

at java.lang.Thread.run(Thread.java:722)

Caused by: java.io.FileNotFoundException: File file:/dfs/name/current/mapred/local/ttprivate/taskTracker/hduser/jobcache/job_201302080254_0002/jobToken does not exist.

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:397)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:251)

at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.(ChecksumFileSystem.java:125)

at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:283)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:427)

at org.apache.hadoop.security.Credentials.readTokenStorageFile(Credentials.java:129)

... 5 more

Try to run it as the sudoer user. I mean, try putting sudo before your command.

Dinesh: hduser@ubuntu:~$ ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hduser/.ssh/id_rsa):

/home/hduser/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Your identification has been saved in /home/hduser/.ssh/id_rsa.

Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.

The key fingerprint is:

28:63:49:ab:75:1c:78:e3:7c:c7:f5:b5:79:9c:f7:11 hduser@ubuntu

The key's randomart image is:

+--[ RSA 2048]----+

| |

| . |

| o + . E.|

| . B + . . . o=|

| B * S o .=+|

| + + . . =|

| . .|

| |

| |

+-----------------+

hduser@ubuntu:~$ cat $home/.ssh/id_rsa.pub>> $home/.ssh/authorized_keys

-su: /.ssh/authorized_keys: No such file or directory

When I run ssh-keygen -t rsa -P "", it asks me to enter a file name. What should I enter?

When I run cat $home/.ssh/id_rsa.pub >> $home/.ssh/authorized_keys, it says no such file exists. Please help me resolve this issue.
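
Two things are going on here. When ssh-keygen asks "Enter file in which to save the key", pressing Enter accepts the default (/home/hduser/.ssh/id_rsa). The "No such file or directory" error comes from $home: shell variables are case-sensitive, so $home is empty and the command tries to write to /.ssh/authorized_keys, exactly as the error shows; the variable you want is $HOME. A minimal sketch of the whole step, assuming the key was generated at the default location (authorize_own_key is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# authorize_own_key (hypothetical helper): append ~/.ssh/id_rsa.pub to
# ~/.ssh/authorized_keys so that "ssh localhost" does not ask for a password.
authorize_own_key() {
  local ssh_dir="$HOME/.ssh"                  # $HOME, not $home: names are case-sensitive
  local pub="$ssh_dir/id_rsa.pub"
  local auth="$ssh_dir/authorized_keys"
  if [ ! -f "$pub" ]; then
    echo "no public key at $pub; run: ssh-keygen -t rsa -P \"\" and press Enter at the file prompt" >&2
    return 1
  fi
  chmod 700 "$ssh_dir"                        # sshd (StrictModes) rejects keys if ~/.ssh is writable by others
  touch "$auth" && chmod 600 "$auth"
  # Append the key only if it is not already there, so re-running is harmless
  grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
}
```

Then authorize_own_key && ssh localhost should log in without a password prompt.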

When I run $ su - hduser, it says "Authentication failure". I am using Ubuntu 12.10. Please help.

Try using the passwd command to reset the hduser password and let me know.

I did it by using $ sudo su - hduser

Thanks

Shan

Hi

I keep getting the following errors. Please help.

hduser@ubuntu:~$ cd hadoop/sbin

hduser@ubuntu:~/hadoop/sbin$ ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh

hduser@ubuntu:~/hadoop/sbin$ ./start-dfs.sh

Incorrect configuration: namenode address dfs.namenode.servicerpc-address or dfs.namenode.rpc-address is not configured.

Starting namenodes on []

localhost: Error: JAVA_HOME is not set and could not be found.

localhost: Error: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: Error: JAVA_HOME is not set and could not be found.

hduser@ubuntu:~/hadoop/sbin$

Hi

Please refer to the "Update $HOME/.bashrc" step above. You will need to close your terminal and open a new one after the update, or you can run source ~/.bashrc to reload the configuration.
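
Hadoop's scripts run $JAVA_HOME/bin/java, so after reloading ~/.bashrc it is worth checking that this file actually exists. Daemons started over ssh (the "localhost:" lines) may not read ~/.bashrc, so setting JAVA_HOME in hadoop-env.sh as well is a common fix. A minimal sketch; check_java_home is a hypothetical helper:

```shell
#!/usr/bin/env bash
# check_java_home (hypothetical helper): verify that JAVA_HOME is set and
# points at a JDK/JRE directory containing an executable bin/java.
check_java_home() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set (check ~/.bashrc and hadoop-env.sh)" >&2
    return 1
  fi
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME=$JAVA_HOME, but $JAVA_HOME/bin/java does not exist" >&2
    return 1
  fi
  echo "JAVA_HOME OK: $JAVA_HOME"
}
```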

Hi,

I am trying to install Hadoop 0.23.0 on Ubuntu 12.04.

I can't configure the hadoop-env.sh file; Hadoop 0.23.0 doesn't have a 'conf' folder. How can I solve this problem?

Try Hadoop 1.1.1 instead; it is a more stable version and you can configure it easily using this link:

http://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-single-node-cluster/

Thank you very much for your reply. But I managed to install Hadoop 0.22.0.

Hi,

When I try to start the cluster, I get the following result. It doesn't show anything about starting the JobTracker and TaskTracker. What could be the problem?

hduser@dhivya-VPCEH26EN:~$ cd /home/hduser/hadoop

hduser@dhivya-VPCEH26EN:~/hadoop$ cd bin

hduser@dhivya-VPCEH26EN:~/hadoop/bin$ ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh

starting namenode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-namenode-dhivya-VPCEH26EN.out

hduser@localhost's password:

localhost: starting datanode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-datanode-dhivya-VPCEH26EN.out

hduser@localhost's password:

localhost: starting secondarynamenode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-secondarynamenode-dhivya-VPCEH26EN.out

hduser@dhivya-VPCEH26EN:~/hadoop/bin$

Hey Dhivya, try this:

Add export HADOOP_HOME_WARN_SUPPRESS="TRUE" to the hadoop-env.sh file.

Nice post. I followed the steps and could run the job successfully. I had an issue because the user was not a sudoer, but I could fix that problem. It is worth adding a note about it, with a pointer to the solution using visudo. Thanks again.

You're welcome :)

Hi Yahia, thanks a lot! However, I'm stuck when I run start-all.sh. I get the following:

hduser@ubuntu-VirtualBox:~$ /home/hduser/hadoop/bin/start-all.sh

Warning: $HADOOP_HOME is deprecated.

mkdir: cannot create directory `/home/hduser/hadoop/libexec/../logs': Permission denied

chown: cannot access `/home/hduser/hadoop/libexec/../logs': No such file or directory

starting namenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-ubuntu-VirtualBox.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 135: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-ubuntu-VirtualBox.out: No such file or directory

head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-ubuntu-VirtualBox.out' for reading: No such file or directory

localhost: mkdir: cannot create directory `/home/hduser/hadoop/libexec/../logs': Permission denied

localhost: chown: cannot access `/home/hduser/hadoop/libexec/../logs': No such file or directory

localhost: starting datanode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-datanode-ubuntu-VirtualBox.out

localhost: /home/hduser/hadoop/bin/hadoop-daemon.sh: line 135: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-datanode-ubuntu-VirtualBox.out: No such file or directory

localhost: head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-datanode-ubuntu-VirtualBox.out' for reading: No such file or directory

localhost: mkdir: cannot create directory `/home/hduser/hadoop/libexec/../logs': Permission denied

localhost: chown: cannot access `/home/hduser/hadoop/libexec/../logs': No such file or directory

localhost: starting secondarynamenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-ubuntu-VirtualBox.out

localhost: /home/hduser/hadoop/bin/hadoop-daemon.sh: line 135: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-ubuntu-VirtualBox.out: No such file or directory

localhost: head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-ubuntu-VirtualBox.out' for reading: No such file or directory

mkdir: cannot create directory `/home/hduser/hadoop/libexec/../logs': Permission denied

chown: cannot access `/home/hduser/hadoop/libexec/../logs': No such file or directory

starting jobtracker, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-ubuntu-VirtualBox.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 135: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-ubuntu-VirtualBox.out: No such file or directory

head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-ubuntu-VirtualBox.out' for reading: No such file or directory

localhost: mkdir: cannot create directory `/home/hduser/hadoop/libexec/../logs': Permission denied

localhost: chown: cannot access `/home/hduser/hadoop/libexec/../logs': No such file or directory

localhost: starting tasktracker, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-tasktracker-ubuntu-VirtualBox.out

localhost: /home/hduser/hadoop/bin/hadoop-daemon.sh: line 135: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-tasktracker-ubuntu-VirtualBox.out: No such file or directory

localhost: head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-tasktracker-ubuntu-VirtualBox.out' for reading: No such file or directory

Can you please help me out with this. Thanks

Did you apply the chmod step?

Thanks man! The best! ;)

But there still seems to be a problem. When I type jps, this is all I get:

5994 SecondaryNameNode

6066 JobTracker

6326 Jps

Can you help me out with what's wrong?

Hi Nabeel

Try to use ./start-all.sh and let me know the result.
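
On a working single-node 0.20/1.x setup, jps should list five Hadoop daemons: NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker. This small sketch compares jps output against that list and prints the missing ones, which tells you which log under hadoop/logs to read first; missing_daemons is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# missing_daemons (hypothetical helper): read `jps` output on stdin and print
# each expected Hadoop 0.20/1.x daemon that is not running.
missing_daemons() {
  local running d
  running=$(awk '{print $2}')          # jps prints "<pid> <MainClass>" per line
  for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
    printf '%s\n' "$running" | grep -qxF "$d" || echo "$d"
  done
}
```

Usage: jps | missing_daemons. For the jps output above, it prints NameNode, DataNode and TaskTracker.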

Can you post how to launch Hadoop on multiple nodes, for example 8 nodes? Waiting for your earliest reply.

I will try hard to post the multi-node installation soon.

Hi,

First of all, thank you for such a brilliant description. I followed your entire tutorial, and everything went fine until the end, but when I tried the last instruction to run 'pi', it says 'hadoop: command not found'.

Can you please help me out with this?

Hi Karthik

You're welcome :)

Can you please send me the command you are using to run the pi example ?

Here is the command and the error. From the comments it looks as if I have set JAVA_HOME wrong, but even after verifying it, I am unable to resolve the error:

hduser@ubuntu:~/hadoop/bin$ /home/hduser/hadoop/bin/hadoop hadoop-0.20.2-examples.jar pi 3 10

Exception in thread "main" java.lang.NoClassDefFoundError: hadoop-0/20/2-examples/jar

Caused by: java.lang.ClassNotFoundException: hadoop-0.20.2-examples.jar

at java.net.URLClassLoader$1.run(URLClassLoader.java:202)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:190)

at java.lang.ClassLoader.loadClass(ClassLoader.java:306)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:247)

Could not find the main class: hadoop-0.20.2-examples.jar. Program will exit.

Just run the following command (assuming that you are in the hadoop/bin folder):

hadoop jar hadoop-0.20.2-examples.jar pi 3 10
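
The jar subcommand is what was missing: without it, bin/hadoop treats its first argument as a Java class name, which is why the error says it could not find the main class hadoop-0.20.2-examples.jar. A minimal sketch that avoids depending on the current folder by using absolute paths; it assumes HADOOP_HOME points at the unpacked hadoop-0.20.2 folder with the examples jar at its top level (set EXAMPLES_JAR otherwise), and run_pi_example is a hypothetical helper.

```shell
#!/usr/bin/env bash
# run_pi_example (hypothetical helper): run the pi example with absolute paths.
run_pi_example() {
  local hadoop_home="${HADOOP_HOME:-}"
  if [ -z "$hadoop_home" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  # Assumed jar location for the 0.20.2 release; override EXAMPLES_JAR if needed
  local jar="${EXAMPLES_JAR:-$hadoop_home/hadoop-0.20.2-examples.jar}"
  if [ ! -f "$jar" ]; then
    echo "examples jar not found: $jar" >&2
    return 1
  fi
  # "jar" is a hadoop subcommand; without it the jar name is taken as a class name
  "$hadoop_home/bin/hadoop" jar "$jar" pi "${1:-3}" "${2:-10}"
}
```

Usage: run_pi_example 3 10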

The word count example runs fine from the bundled WordCount.java, but I am having problems compiling it myself, creating a jar file, and then running it.

Can you kindly explain how to run a MapReduce job, even if it is word count, but not from the jar and WordCount class that ship with Hadoop?

You can use Maven to compile the word count. These are the entries in pom.xml to import the Hadoop dependency:

<dependency>
  <groupId>org.apache.hadoop</groupId>
  <artifactId>hadoop-core</artifactId>
  <version>0.20.2</version>
</dependency>

Hi Yahia Zakaria,

I am very new to Hadoop. I don't even know what it is for or why it exists. I downloaded Hadoop and followed your steps on Ubuntu. I think everything succeeded; if not, please check the output below. Now my problem is: after doing all this, how do I open the Hadoop framework and work with it? Please guide me. Thanks in advance.

hduser@mpower-desktop:~/hadoop/bin$ ./start-all.sh

starting namenode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-namenode-mpower-desktop.out

hduser@localhost's password:

localhost: starting datanode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-datanode-mpower-desktop.out

hduser@localhost's password:

localhost: starting secondarynamenode, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-secondarynamenode-mpower-desktop.out

starting jobtracker, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-jobtracker-mpower-desktop.out

hduser@localhost's password:

localhost: starting tasktracker, logging to /home/hduser/hadoop/bin/../logs/hadoop-hduser-tasktracker-mpower-desktop.out

hduser@mpower-desktop:~/hadoop/bin$

Thanks,

Abdur Rahmaan M

Hi Abdurahman

You can run these commands with root privileges.

Hi Yahia, I am facing a problem: Hadoop can't find my class. How can I fix it?

Hi L.Wajeeta

Sorry, I did not understand your question. Can you please provide more details?

Hi,

How can I set up a multi-node cluster on a single Ubuntu desktop?

Hi

I will try to write another post about multi-node clusters in the near future.

This article is quite detailed. I love it. Nonetheless, I’ve got a problem. Each time I start Hadoop with the ./start-dfs.sh or ./start-all.sh command, I’m prompted to enter the root password, which I do not have. Have you ever encountered this? Do you have any solution? Thank you.

Thanks for your feedback. Did you try giving your user permissions on the hadoop installation folder?

Thanks Fashola, you are welcome :)

You have two options:

1. Run all Hadoop commands as a sudo user, or

2. Give your user permissions on the hadoop folder (using the chmod command).


Thanks! After a frustrating fight with the 0.23.6 release, I downloaded 0.20.2 and followed your instructions; it took only 15 minutes to get the entire thing set up.

Many thanks

You are most welcome :)

Hi,

I am getting the following error while formatting the NameNode:

hadoop@ubuntu:~$ hadoop namenode -format

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/hdfs/server/namenode/NameNode

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hdfs.server.namenode.NameNode

at java.net.URLClassLoader$1.run(URLClassLoader.java:217)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:205)

at java.lang.ClassLoader.loadClass(ClassLoader.java:321)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:294)

at java.lang.ClassLoader.loadClass(ClassLoader.java:266)

Could not find the main class: org.apache.hadoop.hdfs.server.namenode.NameNode. Program will exit.

Thanks.

Hi

Did you set the HADOOP_HOME variable?

I have been trying to install Hadoop forever and finally got it installed, but I'm getting the following error when I try to run the pi 3 10 example:

Exception in thread "main" java.io.IOException: Permission denied

at java.io.UnixFileSystem.createFileExclusively(Native Method)

at java.io.File.checkAndCreate(File.java:1705)

at java.io.File.createTempFile0(File.java:1726)

at java.io.File.createTempFile(File.java:1803)

at org.apache.hadoop.util.RunJar.main(RunJar.java:115)

1. The DataNode is not starting. When I use jps, I do not see the DataNode.

2. When I run pi 3 10, the example you mentioned on your web page, I get the following error:

WARN hdfs.DFSClient: DataStreamer Exception: org.apache.hadoop.ipc.RemoteException: File /user/hduser/PiEstimator_TMP_3_141592654/in/part0 could only be replicated to 0 nodes, instead of 1

Kindly help. I am using Hadoop 1.0.4 on Ubuntu 12.10.

Hi, I have the same problem: when I use jps I can't see the DataNode, and it doesn't appear in the tutorial either. Does somebody know the reason?

Thanks

Hi

Did you try stopping all the services and starting them again? If you are still encountering the same problem, please send us the log files.

Hi Yahia, it works perfectly.

1. I just want to ask you something else. I am new to the world of Hadoop. Could you recommend a page with examples of this technology, for example with MapReduce?

2. The second question: do you know of a video-to-text recognizer (in Java or any other language)? I need to implement one in Hadoop.

Thanks a lot

While running the wordcount example in pseudo-distributed mode, I face a problem. Is it necessary to delete the tmp folder in HDFS every time I complete a job?

I am getting the following error while running the example:

hduser@sush-comp:/usr/local/hadoop$ bin/hadoop jar hadoop-examples-1.0.4.jar wordcount input output

Warning: $HADOOP_HOME is deprecated.

08/01/01 07:02:40 INFO input.FileInputFormat: Total input paths to process : 1

08/01/01 07:02:40 INFO util.NativeCodeLoader: Loaded the native-hadoop library

08/01/01 07:02:40 WARN snappy.LoadSnappy: Snappy native library not loaded

08/01/01 07:02:41 INFO mapred.JobClient: Running job: job_200801010656_0001

08/01/01 07:02:42 INFO mapred.JobClient: map 0% reduce 0%

08/01/01 07:02:47 INFO mapred.JobClient: Task Id : attempt_200801010656_0001_m_000002_0, Status : FAILED

Error initializing attempt_200801010656_0001_m_000002_0:

java.io.IOException: Exception reading file:/TMP/mapred/local/ttprivate/taskTracker/hduser/jobcache/job_200801010656_0001/jobToken

at org.apache.hadoop.security.Credentials.readTokenStorageFile(Credentials.java:135)

at org.apache.hadoop.mapreduce.security.TokenCache.loadTokens(TokenCache.java:165)

at org.apache.hadoop.mapred.TaskTracker.initializeJob(TaskTracker.java:1181)

at org.apache.hadoop.mapred.TaskTracker.localizeJob(TaskTracker.java:1118)

at org.apache.hadoop.mapred.TaskTracker$5.run(TaskTracker.java:2430)

at java.lang.Thread.run(Thread.java:722)

Caused by: java.io.FileNotFoundException: File file:/TMP/mapred/local/ttprivate/taskTracker/hduser/jobcache/job_200801010656_0001/jobToken does not exist.

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:397)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:251)

at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.(ChecksumFileSystem.java:125)

at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:283)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:427)

at org.apache.hadoop.security.Credentials.readTokenStorageFile(Credentials.java:129)

... 5 more

Hi Shan

Are you sure that you have uploaded your input data to HDFS?

Hi Yahia,

I followed Hadoop: The Definitive Guide when modifying core-site.xml for pseudo-distributed single-node operation. After namenode -format, a directory /tmp/user/data-hadoop/dfs/name was created. I transferred my data into the name folder as created and was able to run WordCount successfully. I suggest reconsidering the core-site.xml change as follows:

<property>
  <name>fs.default.name</name>
  <value>hdfs://localhost/</value>
</property>

Hi Yahia, thanks for such a wonderful explanation. I want to know how I can set up a multi-node cluster on a single Ubuntu desktop.

Hi Rashami

You are most welcome :) I have not had time yet to write a post on Hadoop multi-node setup; however, it is pretty simple, and you can follow the configuration steps here:

http://hadoop.apache.org/docs/r1.0.4/cluster_setup.html

Hi Yahia, we are actually waiting for your new post on multi-node setup. Or please suggest some links that you have gone through for the same. We would really appreciate your help. Thanks, waiting for your reply.

Hi Abdur Rahmaan

You are most welcome :) I have not had time yet to write a post on Hadoop multi-node setup; however, it is pretty simple, and you can follow the configuration steps here:

http://hadoop.apache.org/docs/r1.0.4/cluster_setup.html

Thanks a lot. I had already gone through the same link, but it was confusing and not very clear. Anyway, I am trying to set up a multi-node cluster on my own. Thanks Yahia

I am a beginner.

1. I just want to ask you something else. I am new to the world of Hadoop. Could you recommend a page with examples of this technology, for example with MapReduce?

2. The second question: do you know of a video-to-text recognizer (in Java or any other language)? I need to implement one in Hadoop.

Thanks a lot

Hi,

I have installed Hadoop 1.0.4 on top of JDK 7. I am getting the error below while formatting the NameNode. Can you please help me get rid of it? Thanks for your support!

hdfs@ubuntu:~$ /home/hdfs/hadoop/bin/hadoop namenode -format

/home/hdfs/hadoop/bin/hadoop: line 320: /usr/java/java-7-openjdk-amd64/bin/java: No such file or directory

/home/hdfs/hadoop/bin/hadoop: line 390: /usr/java/java-7-openjdk-amd64/bin/java: No such file or directory

I have set $JAVA_HOME incorrect. I changed it and namdenode formatted fine. Thanks!!

When I try to submit a job to HDFS using the hadoop command, I get the following error:

HAdoop: Command not found.

My NameNode works fine.

I have installed Hadoop 1.0.4 on Ubuntu 12.10.

The command is hadoop (not HAdoop); it is case-sensitive. If it still does not work, make sure that HADOOP_HOME is set correctly.
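A `command not found` error usually means the shell cannot find `hadoop` on its `PATH`. A minimal sketch of the lines to add to `~/.bashrc`, assuming Hadoop was unpacked to `/home/hduser/hadoop` (that path is an assumption; use your own installation directory):

```shell
# Assumed install location; change it to where you unpacked Hadoop.
export HADOOP_HOME=/home/hduser/hadoop
# Append Hadoop's bin directory so "hadoop" can be run from any directory.
export PATH="$PATH:$HADOOP_HOME/bin"
```

After `source ~/.bashrc`, `which hadoop` should print `/home/hduser/hadoop/bin/hadoop` if the script is there. In Hadoop 1.x, setting `HADOOP_HOME` may also produce the `Warning: $HADOOP_HOME is deprecated.` message seen further down in this thread; it is only a warning.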

Hi,

I have installed Hadoop 0.20.2 on Windows 7 and the jobtracker is running, but I am getting a "Hadoop: Command not found" error while trying to format the HDFS file system.

Thanks in Advance

Suresh

I have not tried installing Hadoop on Windows 7 before, but I think you should adjust the PATH environment variable to include the bin folder of your Hadoop installation (hadoop/bin).

Hello Yahia,

I tried running ./start-all.sh as both root and hduser, but I end up with these permission denied errors:

hduser@ubuntu:~/hadoop/bin$ ./start-all.sh

Warning: $HADOOP_HOME is deprecated.

chown: changing ownership of `/home/hduser/hadoop/libexec/../logs': Operation not permitted

starting namenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-ubuntu.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 136: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-ubuntu.out: Permission denied

head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-namenode-ubuntu.out' for reading: No such file or directory

hduser@localhost's password:

localhost: Permission denied, please try again.

hduser@localhost's password:

localhost: Permission denied, please try again.

hduser@localhost's password:

localhost: Permission denied (publickey,password).

hduser@localhost's password:

localhost: chown: changing ownership of `/home/hduser/hadoop/libexec/../logs': Operation not permitted

localhost: starting secondarynamenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-ubuntu.out

localhost: /home/hduser/hadoop/bin/hadoop-daemon.sh: line 136: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-ubuntu.out: Permission denied

localhost: head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-ubuntu.out' for reading: No such file or directory

chown: changing ownership of `/home/hduser/hadoop/libexec/../logs': Operation not permitted

starting jobtracker, logging to /home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-ubuntu.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 136: /home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-ubuntu.out: Permission denied

head: cannot open `/home/hduser/hadoop/libexec/../logs/hadoop-hduser-jobtracker-ubuntu.out' for reading: No such file or directory

hduser@localhost's password: hduser@ubuntu:~/hadoop/bin$

localhost: Permission denied, please try again.

hduser@localhost's password:

localhost: Permission denied, please try again.

hduser@localhost's password:

localhost: Permission denied (publickey,password).

hduser@ubuntu:~/hadoop/bin$

I changed the ownership of the hadoop directory to hduser. If you see something missing, can you please tell me what to do?

Regards,

Ravi

Hi Ravi

I think you have installed Hadoop in a folder owned by root; consequently, hduser does not have permission to access it. You have two options: either re-install Hadoop in a folder under hduser's home, or log in as root and grant hduser privileges on your Hadoop installation folder.
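Separately from the folder permissions, the repeated `hduser@localhost's password:` prompts in the log suggest that passwordless SSH is not set up for hduser: `start-all.sh` starts the daemons by logging into localhost over SSH. Below is a sketch of a check, assuming the default key pair created by `ssh-keygen -t rsa -P ""` sits in `~/.ssh`; `key_is_authorized` is a hypothetical helper, not part of Hadoop or OpenSSH.

```shell
#!/bin/sh
# Hypothetical helper: is hduser's own public key listed in its
# authorized_keys? Needed for password-less "ssh localhost".
# Optional argument: the .ssh directory to inspect (default ~/.ssh).
key_is_authorized() {
  ssh_dir="${1:-$HOME/.ssh}"
  pub="$ssh_dir/id_rsa.pub"
  auth="$ssh_dir/authorized_keys"
  if [ ! -f "$pub" ]; then
    echo "no public key: $pub"
    return 1
  fi
  # -F: fixed string, -x: the whole line must equal the key
  if [ -f "$auth" ] && grep -qxF "$(cat "$pub")" "$auth"; then
    echo "authorized"
    return 0
  fi
  echo "missing from $auth (append it: cat $pub >> $auth)"
  return 1
}
```

If it reports the key as missing, appending it as suggested and then testing `ssh localhost` again should stop the password prompts.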

Hi Yahia,

I am using CDH4 (YARN) in pseudo-distributed mode. While updating the software, the namenode and secondary namenode seem to have become corrupted. They do not start after formatting, although the other nodes start.

I am getting the following error:

sush@sush-desktop:~$ for svc in /etc/init.d/hadoop-hdfs-* ; do sudo $svc start ; done

* Starting Hadoop datanode:

starting datanode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-datanode-sush-desktop.out

* Starting Hadoop namenode:

bash: line 0: cd: /var/lib/hdfs/: No such file or directory

* Starting Hadoop secondarynamenode:

bash: line 0: cd: /var/lib/hdfs/: No such file or directory

I deleted /tmp/ and formatted the namenode, but it is still not working. Please help.

Hi Shan

Unfortunately, I have not tried CDH4 before. I hope you have already found a solution to your problem.

Hey man, I want to discuss in detail the problems I am encountering. I have followed every step of your blog, but I am still having problems with Hadoop. If you can, please give me your Gmail ID or your contact number so that we can chat and resolve this issue.

Hi Madhav

You are welcome :) Can you please post your problems here so we can discuss them? They may be beneficial for other readers.

Thanks


I am running Hadoop 1.1.2 as a single-node cluster on Mac OS X.

On running

bin/start-all.sh

3914 JobTracker

3777 NameNode

4624 Jps

Why are these the only things that are up?

P.S. I am extremely new at this and am just setting up and configuring for now. Please advise.

Hi Vaibhav

Can you please post the logs you have?
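A pseudo-distributed Hadoop 1.x setup normally runs five daemons: NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker. The sketch below, a hypothetical helper that is not part of Hadoop, reads `jps` output and lists the ones that are missing, so you know which logs to post.

```shell
#!/bin/sh
# Hypothetical helper: print the Hadoop 1.x daemons absent from jps
# output. Reads "<pid> <ClassName>" lines on stdin.
missing_daemons() {
  running=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
    # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode"
    printf '%s\n' "$running" | grep -qx "$d" || echo "$d"
  done
}
```

Use it as `jps | missing_daemons`. For the output above it would list DataNode, SecondaryNameNode and TaskTracker; the matching logs are under `$HADOOP_HOME/logs/` as `hadoop-<user>-<daemon>-<host>.log`, e.g. `tail -n 50 $HADOOP_HOME/logs/hadoop-*-datanode-*.log`.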

Hello Yahia,

I tried running ./start-all.sh as both root and hduser, but I end up with these permission denied errors:

/home/hduser/hadoop/bin$ ./start-all.sh

mkdir: cannot create directory ‘/home/hduser/hadoop/libexec/../logs’: Permission denied

chown: cannot access ‘/home/hduser/hadoop/libexec/../logs’: No such file or directory

starting namenode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-pankajsingh-namenode-ubuntu.out

/home/hduser/hadoop/bin/hadoop-daemon.sh: line 136: /home/hduser/hadoop/libexec/../logs/hadoop-pankajsingh-namenode-ubuntu.out: No such file or directory

head: cannot open ‘/home/hduser/hadoop/libexec/../logs/hadoop-pankajsingh-namenode-ubuntu.out’ for reading: No such file or directory

pankajsingh@localhost's password:

localhost: mkdir: cannot create directory ‘/home/hduser/hadoop/libexec/../logs’: Permission denied

localhost: chown: cannot access ‘/home/hduser/hadoop/libexec/../logs’: No such file or directory

localhost: starting datanode, logging to /home/hduser/hadoop/libexec/../logs/hadoop-pankajsingh-datanode-ubuntu.out

localhost: /home/hduser/hadoop/bin/hadoop-daemon.sh: line 136: /home/hduser/hadoop/libexec/../logs/hadoop-pankajsingh-datanode-ubuntu.out: No such file or directory

localhost: head: cannot open ‘/home/hduser/hadoop/libexec/../logs/hadoop-pankajsingh-datanode-ubuntu.out’ for reading: No such file or directory

Hi Pankaj

Did you log in as hduser?

Hi Yahia,

Yes, I logged in as hduser, but it is still not working.

This time I am trying to format again and I see this error:

$/home/hduser/hadoop/bin/hadoop namenode -format

bash: /home/hduser/hadoop/bin/hadoop : permission denied

And if I try this:

sudo $/home/hduser/hadoop/bin/hadoop namenode -format

then it shows the message below:

hduser is not in the sudoers file. This incident will be reported.

Does hduser need sudo privileges? If yes, how can I make it a sudoer?

Hi Pankaj

There is no need to make hduser root or a sudoer; hduser has full permissions on its home folder. Did you execute the chmod command?

Yes, I have applied the chmod command:

hduser@ubuntu:~$ chmod 755 /home/hduser/

and then I tried to access the location below, but I am not able to:

hduser@ubuntu:~$ cd /home/hduser/hadoop

bash: cd: /home/hduser/hadoop: Permission denied
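`cd` needs execute permission on every directory along the path, and `chmod 755 /home/hduser/` changes only that one directory, not `/home/hduser/hadoop` inside it. The sketch below, a hypothetical helper that is not part of Hadoop, walks an absolute path and prints the first directory the current user cannot enter (or that does not exist).

```shell
#!/bin/sh
# Hypothetical helper: print the first component of an absolute path
# that the current user cannot traverse. Runs in a subshell "( )" so the
# IFS and set -f changes do not leak into the caller's shell.
first_blocked_dir() (
  set -f   # no globbing while splitting the path
  IFS=/    # split the path on "/"
  p=""
  for part in $1; do
    [ -z "$part" ] && continue   # skip the empty field before the leading "/"
    p="$p/$part"
    if [ ! -x "$p" ]; then
      echo "$p"
      exit 0
    fi
  done
  exit 1   # every component is traversable
)
```

For example, `first_blocked_dir /home/hduser/hadoop` followed by `ls -ld` on the directory it prints shows who owns it. Since hduser is not a sudoer, the ownership fix the post's steps rely on (something like `sudo chown -R hduser:hadoop /home/hduser/hadoop`) would have to be run from an administrator account.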

Hi Yahia,

Now I have fixed the problem and run the ./start-all.sh command successfully. Then I ran the jps command as below:

hduser@ubuntu:~/hadoop/bin$ jps

9968 Jps

Only the result above is shown. It does not show NameNode, JobTracker, TaskTracker, etc. like your last screenshot.

Can you please send the logs you have?

Hi Yahia,

Here are the logs from all files:

datanode

2013-07-22 08:57:09,895 FATAL org.apache.hadoop.conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

2013-07-22 08:57:09,923 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

jobtracker

2013-07-22 08:57:27,130 FATAL org.apache.hadoop.conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

2013-07-22 09:28:03,432 FATAL org.apache.hadoop.conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

namenode

2013-07-22 08:57:07,893 FATAL org.apache.hadoop.conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

2013-07-22 08:57:07,893 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

tasktracker

2013-07-22 09:28:07,082 FATAL org.apache.hadoop.conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

2013-07-22 09:28:07,082 ERROR org.apache.hadoop.mapred.TaskTracker: Can not start task tracker because java.lang.RuntimeException: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

Secondarynamenode

2013-07-22 08:57:25,954 FATAL org.apache.hadoop.conf.Configuration: error parsing conf file: org.xml.sax.SAXParseException; systemId: file:/home/hduser/hadoop/conf/core-site.xml; lineNumber: 8; columnNumber: 2; The markup in the document following the root element must be well-formed.

It seems the core-site.xml file is not well-formed. Can you please open this file at line 8 and check the problem? Please keep me posted if you solve it.
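The parser message "The markup in the document following the root element must be well-formed" typically means something other than whitespace or comments follows the closing tag of the root element, for example a `<property>` pasted after `</configuration>` or a second `<configuration>` block. The sketch below writes a minimal core-site.xml with every property inside a single `<configuration>` element and flags lines after the root's closing tag. The two values follow Michael G. Noll's single-node tutorial, which this post is based on; treat them as assumptions and adjust `hadoop.tmp.dir` and the port to your own setup. Both helpers are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal, well-formed core-site.xml
# (Hadoop 1.x property names; values assumed from Noll's tutorial).
write_core_site() {
  cat > "$1" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
EOF
}

# Hypothetical helper: print every non-blank line after </configuration>
# as "file:line: text". Legal XML comments there are flagged too.
trailing_after_root() {
  awk 'seen && NF { print FILENAME ":" FNR ": " $0 } /<\/configuration>/ { seen = 1 }' "$1"
}
```

Running `trailing_after_root /home/hduser/hadoop/conf/core-site.xml` on the broken file should point at the stray markup.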

Hi Yahia,

Thanks for your support; I am now done with the Hadoop installation part.

What is the next step now? I mean, could you please help me with a sample project so that I can do some exercises?
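A common first exercise is the WordCount example bundled with Hadoop. To know what output to expect, the same computation can be mimicked locally with a Unix pipeline: splitting into words plays the role of the map step, sorting groups identical words like the shuffle, and counting each group is the reduce. This is only an illustration of the idea under those assumptions, not Hadoop's implementation; `local_wordcount` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: a local, single-machine imitation of WordCount.
#   map     -> one word per line            (tr)
#   shuffle -> bring identical words together (sort, byte order via LC_ALL=C)
#   reduce  -> count each group             (uniq -c)
# Prints "word<TAB>count", the same layout as WordCount's part-* files.
local_wordcount() {
  tr -s '[:space:]' '\n' | grep -v '^$' | LC_ALL=C sort | uniq -c |
    awk '{print $2 "\t" $1}'
}
```

On the cluster, the bundled example is usually launched along the lines of `bin/hadoop jar hadoop*examples*.jar wordcount <input dir> <output dir>`, where the exact jar name depends on your Hadoop version; for the same plain-text input, its output should roughly match the pipeline's.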

Hey Yahia,

Please help me with my previous comment.

Hi,