If you want to edit a PDF file or a PDF form on Ubuntu, LibreOffice is a good tool to try before investigating other tools.

Simply open a terminal (Ctrl + Alt + T), navigate to the folder containing your PDF, and type the following:

libreoffice <pdf_name.pdf>

Friday, June 16, 2017

Sunday, December 7, 2014

Installing JDK 1.7 on ubuntu server

Follow these steps to install JDK 1.7 on Ubuntu Server:

1. Based on your Linux architecture, download the proper version from the Oracle website (Oracle JDK 1.7).

2. Then, uncompress the jdk archive using the following command:

tar -xvf jdk-7u65-linux-i586.tar

Or using the following command for 64 bits:

tar -xvf jdk-7u65-linux-x64.tar

3. Create the jvm folder (if it does not already exist) using the following command:

sudo mkdir -p /usr/lib/jvm

4. Then, move the extracted directory to /usr/lib/jvm:

sudo mv ~/Downloads/jdk1.7.0_71 /usr/lib/jvm/

5. Run the following commands to update the execution alternatives:

sudo update-alternatives --install "/usr/bin/java" "java" "/usr/lib/jvm/jdk1.7.0_71/bin/java" 1

sudo update-alternatives --install "/usr/bin/javac" "javac" "/usr/lib/jvm/jdk1.7.0_71/bin/javac" 1

sudo update-alternatives --install "/usr/bin/javaws" "javaws" "/usr/lib/jvm/jdk1.7.0_71/bin/javaws" 1

6. Finally, you need to export the JAVA_HOME variable:

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_71

Or, better, set JAVA_HOME in .bashrc:

nano ~/.bashrc

then add the same line:

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_71
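To check that the JVM you run and the JAVA_HOME you exported agree, a small Java program can print both. This is only a sketch: the class name JavaHomeCheck and the helper looksLikeJdk are made up for illustration, and the check simply looks for an executable bin/java under the given folder.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper (not part of the original post): reports which JVM is running
// and whether JAVA_HOME points at a folder that contains bin/java.
class JavaHomeCheck {

    // Returns true when the given folder contains an executable bin/java.
    static boolean looksLikeJdk(String javaHome) {
        if (javaHome == null || javaHome.isEmpty()) {
            return false;
        }
        Path home = Paths.get(javaHome);
        return Files.isExecutable(home.resolve("bin").resolve("java"));
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        System.out.println("JAVA_HOME    = " + javaHome);
        System.out.println("java.home    = " + System.getProperty("java.home"));
        System.out.println("java.version = " + System.getProperty("java.version"));
        System.out.println("JAVA_HOME looks valid: " + looksLikeJdk(javaHome));
    }
}
```

If JAVA_HOME prints as null, the export line was probably not loaded into the current shell; open a new terminal or run source ~/.bashrc and try again.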

Saturday, June 14, 2014

How to download the source code of test jars using Gradle

I faced a problem while trying to download the source code of some test jars. You just need to specify "test-sources" as the classifier.

For example:

compile group:'org.xyz', name:'your-target-main-jar', version:'jar-version', classifier: 'test-sources'

This downloads your target main jar (along with its dependencies) and the sources of its test jar.

Also, if you are using Eclipse, you will need to add the following:

eclipse {

classpath {

downloadSources=true

}

}

Tuesday, November 26, 2013

Installing VMWare Player on Ubuntu 13.04

I did not find a way to install VMWare Player directly on Ubuntu 13.04 (64-bit). However, I found an archived site hosting VMWare Player 6.0.0 for 64-bit Ubuntu.

To install VMWare 6.0.0, we need to install VMWare 5.0.3 first; the automatic update will then do the rest for version 6.0.0. To do that, open a terminal and run the following commands:

sudo apt-get install build-essential linux-headers-`uname -r`

mkdir ~/VMware

cd ~/VMware

wget -c https://softwareupdate.vmware.com/cds/vmw-desktop/player/5.0.3/1410761/linux/core/VMware-Player-5.0.3-1410761.x86_64.bundle.tar

tar -xvf VMware-Player-5.0.3-1410761.x86_64.bundle.tar

chmod +x VMware-Player-5.0.3-1410761.x86_64.bundle

sudo ./VMware-Player-5.0.3-1410761.x86_64.bundle

After the installation, type "VMWare" in the start menu; it will then ask you to upgrade to VMWare 6.0.0.

Saturday, May 19, 2012

Installing Hadoop on Ubuntu (Linux) - single node - Problems you may face

This is not a new post; it is based on Michael G. Noll's blog post about Running Hadoop on Ubuntu (Single Node).

I will go through the same steps, but I will point out some exceptions/errors you may face.

Because I am a very new user of Ubuntu, this post mainly targets Windows users who have only basic knowledge of Linux. I may write some Linux hints that seem trivial to Linux geeks, but they may be useful for Windows users.

Moreover, I am assuming that you have enough knowledge about the HDFS architecture. You can read this document for more details.

I have used Ubuntu 11.04 and Hadoop 0.20.2.

Prerequisites:

1. Installing Sun JDK 1.6: Installing the JDK is a required step before installing Hadoop. You can follow the steps in my previous post.

Update

There is another, simpler way to install the JDK (for example, JDK 1.7) using the instructions in this post.

2. Adding a dedicated Hadoop system user: You will need a dedicated user for the Hadoop system you are going to install. To create a new user "hduser" in a group called "hadoop", run the following commands in your terminal:

$sudo addgroup hadoop

$sudo adduser --ingroup hadoop hduser

3. Configuring SSH: In Michael's blog, he assumed that SSH is already installed. If you have not installed an SSH server before, you can run the following command in your terminal:

$sudo apt-get install openssh-server

This command installs an SSH server on your machine; the port is 22 by default.

We have installed SSH because Hadoop requires access to localhost (in the case of a single-node cluster) or communicates with remote nodes (in the case of a multi-node cluster).

After this step, you will need to generate an SSH key for hduser (and for any other users you need to administer Hadoop). Switch to hduser first, then run the following commands:

$su - hduser

$ssh-keygen -t rsa -P ""

To make sure that the SSH installation went well, you can open a new terminal and try to create an SSH session as hduser using the following command:

$ssh localhost

4. Disable IPv6: You will need to disable IP version 6 because Ubuntu uses the 0.0.0.0 IP address for different Hadoop configurations. You will need to run the following commands using a root account:

$sudo gedit /etc/sysctl.conf

This command opens sysctl.conf in a text editor. Copy the following lines to the end of the file:

#disable ipv6

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

Save the file and close it. If you get an error saying you don't have permissions, remember to run the previous command with your root account.

These changes normally require a reboot, but alternatively, you can run the following command to reload the configuration:

$sudo sysctl -p

To make sure that IPv6 is disabled, you can run the following command:

$cat /proc/sys/net/ipv6/conf/all/disable_ipv6

The printed value should be 1, which means that IPv6 is disabled.

Installing Hadoop

Now we can download Hadoop to begin the installation. Go to Apache Downloads and download Hadoop version 0.20.2. To avoid permission issues, you can download the tar file into the hduser home directory, for example, /home/hduser. Check the following snapshot:

Then you need to extract the tar file and rename the extracted folder to 'hadoop'. Open a new terminal and run the following command:

$ cd /home/hduser

$ sudo tar xzf hadoop-0.20.2.tar.gz

$ sudo mv hadoop-0.20.2 hadoop

Please note that if you want to grant access to another Hadoop admin user (e.g. hduser2), you have to give that user ownership of the hadoop folder under /home/hduser using the following command:

sudo chown -R hduser2:hadoop hadoop

Update $HOME/.bashrc

You will need to update the .bashrc file for hduser (and for every user who needs to administer Hadoop). To edit the .bashrc file, open it as root:

$sudo gedit /home/hduser/.bashrc

Then add the following configuration at the end of the .bashrc file:

# Set Hadoop-related environment variables

export HADOOP_HOME=/home/hduser/hadoop

# Set JAVA_HOME (we will also configure JAVA_HOME directly for Hadoop later on)

export JAVA_HOME=/usr/lib/jvm/java-6-sun

# or you can write the following command if you used this post to install your java

# export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_71

# Some convenient aliases and functions for running Hadoop-related commands

unalias fs &> /dev/null

alias fs="hadoop fs"

unalias hls &> /dev/null

alias hls="fs -ls"

# If you have LZO compression enabled in your Hadoop cluster and

# compress job outputs with LZOP (not covered in this tutorial):

# Conveniently inspect an LZOP compressed file from the command

# line; run via:

#

# $ lzohead /hdfs/path/to/lzop/compressed/file.lzo

#

# Requires installed 'lzop' command.

#

lzohead () {

hadoop fs -cat $1 | lzop -dc | head -1000 | less

}

# Add Hadoop bin/ directory to PATH

export PATH=$PATH:$HADOOP_HOME/bin

Hadoop Configuration

Now we need to configure the Hadoop framework on the Ubuntu machine. The following are the configuration files we need to edit. To learn more about Hadoop configuration, you can visit this site.

hadoop-env.sh

We only need to update the JAVA_HOME variable in this file. Open the file in a text editor using the following command:

$sudo gedit /home/hduser/hadoop/conf/hadoop-env.sh

Then you will need to change the following line

# export JAVA_HOME=/usr/lib/j2sdk1.5-sun

To

export JAVA_HOME=/usr/lib/jvm/java-6-sun

or, if you used this post to install Java, you can use the following line instead:

# export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_71

Note: if you face the "Error: JAVA_HOME is not set" error while starting the services, you probably forgot to uncomment the previous line (just remove the #).

core-site.xml

First, we need to create a temp directory for the Hadoop framework. If you need this environment for testing or a quick prototype (e.g. developing simple Hadoop programs for your personal tests), I suggest creating this folder under the /home/hduser/ directory. Otherwise, you should create it in a shared location (like /usr/local), but you may face some security issues there. To avoid the exceptions that may be caused by security (like java.io.IOException), I have created the tmp folder under the hduser home directory.

To create this folder, type the following command:

$ sudo mkdir /home/hduser/tmp

Please note that if you want to add another admin user (e.g. hduser2 in the hadoop group), you should grant that user read and write permissions on this folder using the following commands:

$ sudo chown hduser2:hadoop /home/hduser/tmp

$ sudo chmod 755 /home/hduser/tmp

Now we can open hadoop/conf/core-site.xml in a text editor to edit the hadoop.tmp.dir entry:

$sudo gedit /home/hduser/hadoop/conf/core-site.xml

Then add the following configurations between <configuration> .. </configuration> xml elements:

<!-- In: conf/core-site.xml -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hduser/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:54310</value>

<description>The name of the default file system. A URI whose

scheme and authority determine the FileSystem implementation. The

uri's scheme determines the config property (fs.SCHEME.impl) naming

the FileSystem implementation class. The uri's authority is used to

determine the host, port, etc. for a filesystem.</description>

</property>
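To double-check what a site file such as core-site.xml actually contains, the property/name/value layout can be read with the XML parser that ships with the JDK. The sketch below does not use Hadoop's own Configuration class; the class name SiteXmlReader and its method are hypothetical, and the XML string in main is just a shortened copy of the entries above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: lists the name/value pairs of a Hadoop-style site file.
class SiteXmlReader {

    // Parses <configuration><property><name>..</name><value>..</value></property>...
    static Map<String, String> readProperties(String xml) {
        try {
            DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
            Document doc = builder.parse(
                    new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> result = new LinkedHashMap<>();
            NodeList properties = doc.getElementsByTagName("property");
            for (int i = 0; i < properties.getLength(); i++) {
                Element property = (Element) properties.item(i);
                String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
                result.put(name, value);
            }
            return result;
        } catch (Exception e) {
            // Malformed XML or a property without name/value ends up here.
            throw new IllegalStateException("Could not read configuration", e);
        }
    }

    public static void main(String[] args) {
        String xml =
                "<configuration>"
                + "<property><name>hadoop.tmp.dir</name><value>/home/hduser/tmp</value></property>"
                + "<property><name>fs.default.name</name><value>hdfs://localhost:54310</value></property>"
                + "</configuration>";
        for (Map.Entry<String, String> entry : readProperties(xml).entrySet()) {
            System.out.println(entry.getKey() + " = " + entry.getValue());
        }
    }
}
```

A parse failure here usually means the property blocks were pasted outside the configuration element or a closing tag is missing.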

mapred-site.xml

We will open the hadoop/conf/mapred-site.xml using a text editor and add the following configuration values (like core-site.xml)

<!-- In: conf/mapred-site.xml -->

<property>

<name>mapred.job.tracker</name>

<value>localhost:54311</value>

<description>The host and port that the MapReduce job tracker runs

at. If "local", then jobs are run in-process as a single map

and reduce task.

</description>

</property>

hdfs-site.xml

Open hadoop/conf/hdfs-site.xml using a text editor and add the following configurations:

<!-- In: conf/hdfs-site.xml -->

<property>

<name>dfs.replication</name>

<value>1</value>

<description>Default block replication.

The actual number of replications can be specified when the file is created.

The default is used if replication is not specified in create time.

</description>

</property>

Formatting NameNode

You should format the NameNode of your HDFS. Do not do this step while the system is running, because formatting erases the data in HDFS. It is usually done only once, when you first install Hadoop.

Run the following command:

$/home/hduser/hadoop/bin/hadoop namenode -format

Figure: NameNode Formatting

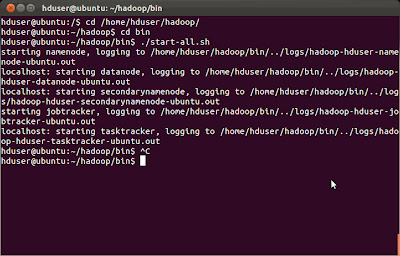

Starting Hadoop Cluster

You will need to navigate to hadoop/bin directory and run ./start-all.sh script.

Figure: Starting Hadoop Services using ./start-all.sh

There is a nice tool called jps. You can use it to ensure that all the services are up.

Figure: Using jps tool

Running an Example (Pi Example)

There are many built-in examples. We can run the Pi estimator example using the following command:

hduser@ubuntu:~/hadoop/bin$ hadoop jar ../hadoop-0.20.2-examples.jar pi 3 10
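In the command above, the two arguments are the number of map tasks (3) and the number of samples per map (10). As far as I understand, the bundled example estimates Pi by counting how many points of a Halton sequence fall inside a circle inscribed in the unit square. The sketch below shows that idea in a single process; it is not the Hadoop source, and the class and method names are made up for illustration.

```java
// Single-process sketch of a quasi-Monte Carlo Pi estimate (hypothetical names,
// not the code shipped in hadoop-0.20.2-examples.jar).
class PiSketch {

    // Radical inverse of index in the given base: one coordinate of a Halton point.
    static double halton(long index, int base) {
        double result = 0.0;
        double fraction = 1.0 / base;
        long i = index;
        while (i > 0) {
            result += fraction * (i % base);
            i /= base;
            fraction /= base;
        }
        return result;
    }

    // Counts the points that fall inside the circle of radius 0.5 centred in the unit square.
    static double estimatePi(int maps, int samplesPerMap) {
        long total = (long) maps * samplesPerMap;
        long inside = 0;
        for (long n = 1; n <= total; n++) {
            double x = halton(n, 2) - 0.5;
            double y = halton(n, 3) - 0.5;
            if (x * x + y * y <= 0.25) {
                inside++;
            }
        }
        // Area of circle / area of square = pi / 4.
        return 4.0 * inside / total;
    }

    public static void main(String[] args) {
        System.out.println("3 maps x 10 samples:      " + estimatePi(3, 10));
        System.out.println("10 maps x 100000 samples: " + estimatePi(10, 100000));
    }
}
```

With only 3 x 10 samples the estimate is rough; increasing either argument gives a closer value at the cost of a longer job.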

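If you face the "Incompatible namespaceIDs" exception, you can do the following:

1. Stop all the services (by calling ./stop-all.sh).

2. Delete /tmp/hadoop/dfs/data/* (if you set hadoop.tmp.dir to /home/hduser/tmp as above, the data folder is /home/hduser/tmp/dfs/data instead).

3. Start all the services.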

Sunday, May 13, 2012

Java Performance Tips 2

Introduction

We talked about String operations in the last article. Now we will cover more performance tips: the importance of creating stateless methods and classes when your logic doesn't depend on the state of the object, and how reusing objects affects performance by reducing the burden on the garbage collector.

Take the 'State or Stateless' Decision

Creating and destroying objects in Java can cause performance issues, for example when you create stateful classes for stateless data. A stateless class is one whose behavior doesn't depend on object state: there are no fields or attributes that make one object differ from another.

We can imagine the stateless class saying: 'Use me only if your code doesn't depend on your object state. All the objects are functionally equivalent to me. Besides, you gain by not creating objects, and the CPU will be happy about that :)'

Code Example

The following StatelessClassWithoutStaticMethods class has a method called execute. The logic of this method doesn't depend on the state of the object, yet it is not a static method.

    package performancetest.episode2;

    public class StatelessClassWithoutStaticMethods {

        public void execute() {
            // the logic here doesn't depend on the object state
            for (int i = 0; i < 10000; i++) {
                System.out.println(i);
            }
        }
    }

The right version is as follows:

    package performancetest.episode2;

    public class StatelessClassWithStaticMethods {

        public static void execute() {
            // the logic here doesn't depend on the object state
            for (int i = 0; i < 10000; i++) {
                System.out.println(i);
            }
        }
    }

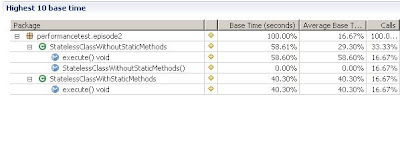

Performance Analysis

Using TPTP (Eclipse Test & Performance Tools Platform Project), the results are as follows:

Figure: Performance Analysis

Based on this figure, the static execute method took approximately 18% less time than the non-static one.

That doesn't mean that you have to make the whole class static (i.e. a class that has static methods only). If a method in this class depends on the object state, make only that method non-static.
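A class can mix both kinds of methods. In the sketch below, which uses a made-up TemperatureLog class rather than anything from the profiled project, record reads and writes a field and therefore stays an instance method, while toFahrenheit depends only on its argument and can be static.

```java
// Hypothetical example class: one stateful method and one stateless method side by side.
class TemperatureLog {

    private double lastReading; // instance state, starts at 0.0

    // Depends on the field above, so it must stay an instance method.
    // Returns the change since the previous reading.
    double record(double celsius) {
        double previous = lastReading;
        lastReading = celsius;
        return celsius - previous;
    }

    // Pure function of its argument: no object state involved, so it can be static.
    static double toFahrenheit(double celsius) {
        return celsius * 9.0 / 5.0 + 32.0;
    }

    public static void main(String[] args) {
        // No object is needed for the stateless call.
        System.out.println(TemperatureLog.toFahrenheit(100.0));

        // The stateful call needs an instance because it remembers the last reading.
        TemperatureLog log = new TemperatureLog();
        log.record(20.0);
        System.out.println(log.record(25.0));
    }
}
```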

Don't Create A New One If You Can Reuse What You Have

'You can use me before I go to the garbage collector: clear my data and reuse me again. We want to lessen the work of our friend, the garbage collector.' Any object could say this :) As we know, creating new objects is expensive, and the more objects we create, the more time-consuming the garbage collector's job becomes.

So if you have a chance to reuse an object without creating a new one of the same type, do it. It will improve performance and make the garbage collector's job easier.

Code Example

    import java.util.Vector;

    public static void fillVectorDataWithoutRecycling() {
        for (int i = 0; i < 1000; i++) {
            // a new Vector is allocated on every iteration
            Vector<String> v = new Vector<String>();
            v.add("item1");
            v.add("item2");
            v.add("item3");
            System.out.println(v.get(0));
        }
    }

    public static void fillVectorDataWithRecycling() {
        // one Vector is allocated once and reused
        Vector<String> v = new Vector<String>();
        for (int i = 0; i < 1000; i++) {
            v.clear();
            v.add("item1");
            v.add("item2");
            v.add("item3");
            System.out.println(v.get(0));
        }
    }

We have two methods: fillVectorDataWithoutRecycling creates a new Vector object on every loop iteration, while fillVectorDataWithRecycling creates one Vector object, then clears and reuses it.

Performance Analysis

Figure: Performance Analysis With/Without Vector Recycling

From this figure, we found that reusing the current object saves approximately 50% of the time (of course, without taking into consideration the hardware architecture, such as CPU, cache and memory).
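If you do not have a profiler at hand, a rough comparison can be made with System.nanoTime. The sketch below is an assumption-laden stand-in for the profiled code: it uses ArrayList instead of Vector, the class and method names are hypothetical, and the timings it prints will vary between machines and JVM versions, so treat them as an indication only.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical timing sketch: allocate a list per round versus clearing and reusing one list.
class ReuseSketch {

    // Allocates a fresh list on every round and returns the total number of items added.
    static int fillWithoutReuse(int rounds) {
        int checksum = 0;
        for (int i = 0; i < rounds; i++) {
            List<String> items = new ArrayList<>();
            items.add("item1");
            items.add("item2");
            items.add("item3");
            checksum += items.size();
        }
        return checksum;
    }

    // Allocates one list, then clears and refills it on every round.
    static int fillWithReuse(int rounds) {
        int checksum = 0;
        List<String> items = new ArrayList<>();
        for (int i = 0; i < rounds; i++) {
            items.clear();
            items.add("item1");
            items.add("item2");
            items.add("item3");
            checksum += items.size();
        }
        return checksum;
    }

    public static void main(String[] args) {
        int rounds = 1_000_000;

        long start = System.nanoTime();
        int first = fillWithoutReuse(rounds);
        long withoutReuse = System.nanoTime() - start;

        start = System.nanoTime();
        int second = fillWithReuse(rounds);
        long withReuse = System.nanoTime() - start;

        System.out.println("without reuse: " + withoutReuse / 1_000_000 + " ms (" + first + " items)");
        System.out.println("with reuse:    " + withReuse / 1_000_000 + " ms (" + second + " items)");
    }
}
```

A single run like this includes JIT warm-up, so repeat the measurement a few times before drawing conclusions.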

Java Performance Tips 1 - String Operations

String Operations

There are some performance tips for String manipulation in Java; one of them concerns the concatenation operation.

Concatenation can be done by appending values to the same String object, for example:

String str = new String ();

String s = "test";

str+=s;

str+="testString";

The compiler translates these lines into roughly the following :)

str = (new StringBuffer()).append(s).append("testString").toString();

But this method is not preferable (as we will see later); another method is to use StringBuffer.

Using StringBuffer Method

StringBuffer is used to store character strings that will change, since, as we know, the String class is immutable. We can concatenate strings as follows:

StringBuffer sbuffer = new StringBuffer();

sbuffer.append("testString");

StringBuffer vs StringBuilder

There is also another way to concatenate strings: StringBuilder, which was introduced in Java 5. StringBuilder is like StringBuffer except that it is not synchronized, which means that if many threads share one instance, they can change it at the same time (so a StringBuilder is not suitable for sharing between threads in multithreaded applications).
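The synchronization difference can be seen by letting several threads append to one shared StringBuffer. The sketch below uses hypothetical names and only exercises StringBuffer, whose append method is synchronized, so no append is lost. Swapping in a shared StringBuilder would give no such guarantee, although a StringBuilder that stays inside a single thread remains perfectly safe.

```java
// Hypothetical sketch: several threads append to one shared StringBuffer.
class BufferThreadsSketch {

    // Appends one character at a time from each thread and returns the final length
    // once every thread has finished.
    static int appendFromThreads(StringBuffer shared, int threads, int appendsPerThread) {
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < appendsPerThread; i++) {
                    shared.append('x'); // synchronized in StringBuffer
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting for workers", e);
            }
        }
        return shared.length();
    }

    public static void main(String[] args) {
        int length = appendFromThreads(new StringBuffer(), 4, 1000);
        System.out.println("Length after 4 threads x 1000 appends: " + length);
    }
}
```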

'OK, why all this stuff just to concatenate some strings?' you may ask. The answer comes after running a sample of each approach and profiling the performance.

Code Example

public class StringOperations {

public void concatenateUsingString() {

String str = new String();

for (int i = 0; i < 10000; i++) {

str += "testString";

}

}

public void concatenateUsingStringBuffer()

{

StringBuffer sbuffer = new StringBuffer();

for (int i = 0; i < 10000; i++) {

sbuffer.append("testString");

}

}

public void concatenateUsingStringBuilder()

{

StringBuilder sbuilder = new StringBuilder();

for (int i = 0; i < 10000; i++) {

sbuilder.append("testString");

}

}

}

And in the main method, we simply call the three methods:

public static void main(String[] args) {

StringOperations soperations = new StringOperations();

soperations.concatenateUsingString();

soperations.concatenateUsingStringBuffer();

soperations.concatenateUsingStringBuilder();

}

I have used the Eclipse Test & Performance Tools Platform Project (TPTP) to validate the results. Just right-click on the project and choose 'Profile As'.

Then choose 'Profile → Profile Configuration'.

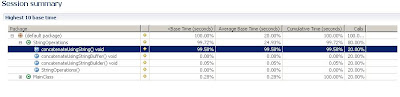

Figure: Profile Configuration

This result shows the big performance cost of String concatenation (the plus operation). The profiler tells us that the calls to concatenateUsingStringBuffer and concatenateUsingStringBuilder (approximately 0.08% of the time) are negligible compared with concatenateUsingString (99.85% of the time).
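Without TPTP, the same comparison can be approximated with System.nanoTime. This is a rough sketch with hypothetical class and method names; it will not reproduce the exact percentages above, and the numbers depend on the JVM and machine.

```java
// Hypothetical timing sketch for the three concatenation approaches.
class ConcatTiming {

    // Repeated += on a String: every iteration copies the whole string built so far.
    static String viaString(int n) {
        String s = "";
        for (int i = 0; i < n; i++) {
            s += "testString";
        }
        return s;
    }

    // Synchronized, growable buffer.
    static String viaBuffer(int n) {
        StringBuffer buffer = new StringBuffer();
        for (int i = 0; i < n; i++) {
            buffer.append("testString");
        }
        return buffer.toString();
    }

    // Unsynchronized, growable buffer.
    static String viaBuilder(int n) {
        StringBuilder builder = new StringBuilder();
        for (int i = 0; i < n; i++) {
            builder.append("testString");
        }
        return builder.toString();
    }

    // Runs the task once and returns the elapsed wall-clock time in nanoseconds.
    static long timeNanos(Runnable task) {
        long start = System.nanoTime();
        task.run();
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        int n = 10000;
        System.out.println("String +=     : " + timeNanos(() -> viaString(n)) / 1000 + " us");
        System.out.println("StringBuffer  : " + timeNanos(() -> viaBuffer(n)) / 1000 + " us");
        System.out.println("StringBuilder : " + timeNanos(() -> viaBuilder(n)) / 1000 + " us");
    }
}
```

All three methods return the same text, so the comparison measures only how the string is built.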

http://www.javaworld.com/javaworld/jw-03-2000/jw-0324-javaperf.html

http://leepoint.net/notes-java/data/strings/23stringbufferetc.html
